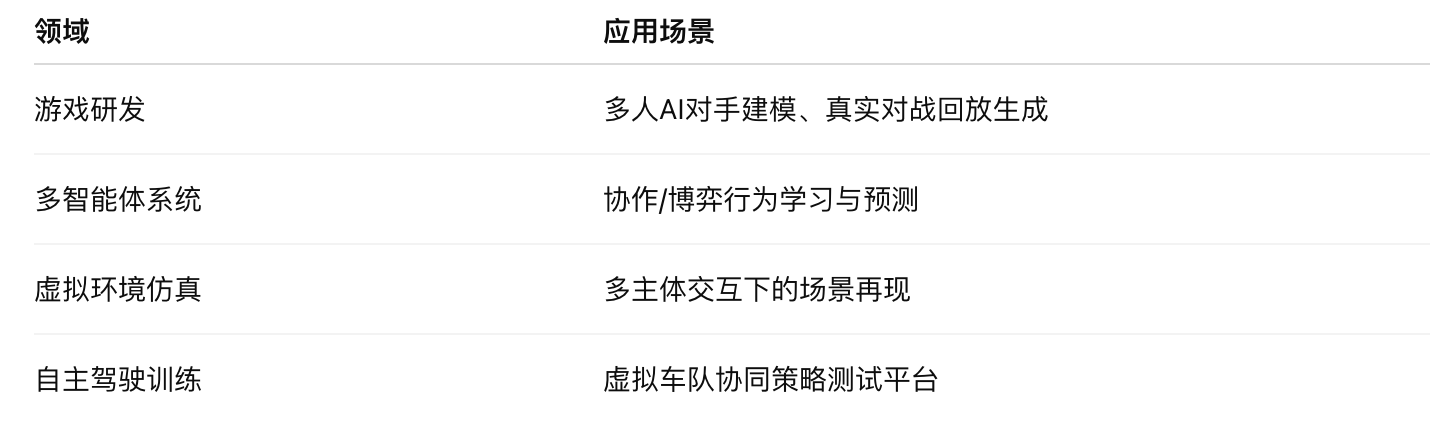

- Core technical architecture

- Basic structure of a single-player world model (baseline)

- Multiverse: structural changes for a multiplayer model

- Input data design: how the player views are merged

- Long-horizon modelling and context optimization

- Problem: slowly changing but critical multi-car interactions need longer context windows

- Dataset construction and training process

- Game platform: Gran Turismo 4 (GT4)

- Data acquisition strategy

- Automated data generation

- Research value and application prospects

- Core breakthroughs

Enigma Labs has launched Multiverse, a ground-breaking AI-generated multiplayer game in which players shape and influence the world together in real time. The model addresses a limitation of existing world models, which can only simulate a single agent, by building an architecture that supports **multi-agent perception, prediction, and interaction in a shared environment**. The entire project cost only $1.5K, and its innovations in architecture, data collection, and model design have been fully open-sourced.

- **Multiplayer interaction**: multiple players can enter the AI-simulated world at the same time, and **everyone's actions affect the world in real time**. For example, when you accelerate, drift, or overtake, the world changes accordingly.

- **Cost-effective**: training and research and development cost **less than $1,500**, and the model can run on an ordinary PC. The key is not massive compute but technical innovation.

- **Fully open source**: the team has released the entire project (code, data, model weights, architecture, and research results) so that anyone can learn from, use, or improve it.

- **Not limited to games**: this "AI world model" matters beyond gaming and can also be applied to broader simulation settings, such as robot training and AI collaboration.

Core technical architecture

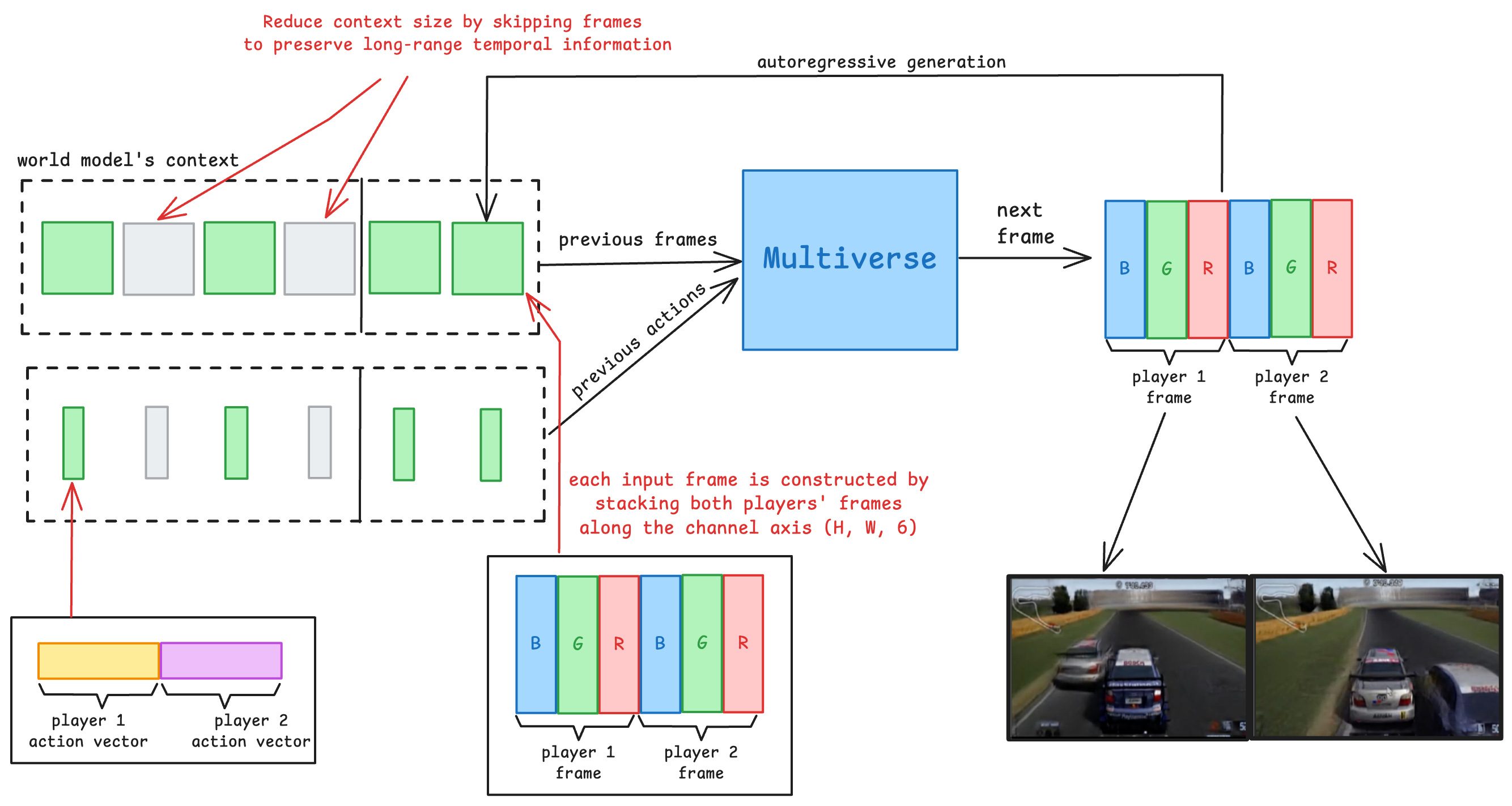

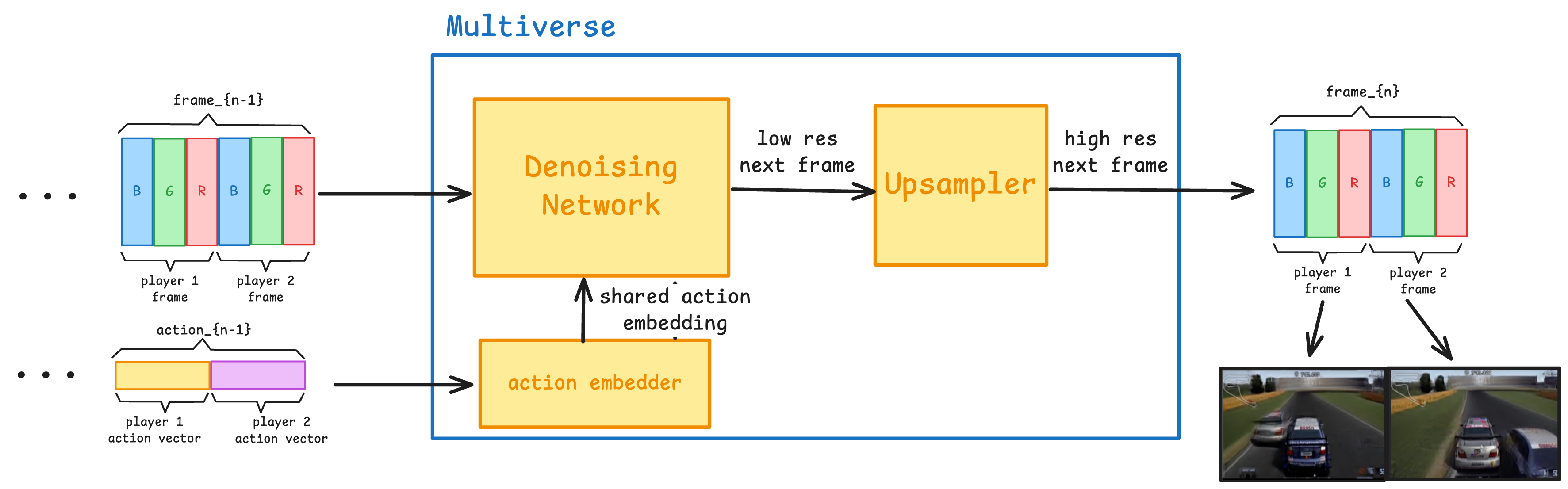

# Basic structure of a single-player world model (baseline)

A traditional single-player world model usually consists of three components:

- **Action embedder**: converts the player's input (e.g. key presses) into a vector representation.

- **Denoising network**: a diffusion model that uses the input actions and the previous video frames to predict the next frame.

- **Upsampler**: enhances the low-resolution frame to produce a high-resolution output.

Multiverse: structural changes for a multiplayer model

Multiverse redesigns each component to handle multiplayer scenes:

- **Dual-input action embedder**: receives the control inputs of both players and builds a single combined vector.

- **Shared denoising network**: a single unified model generates the video frames for both players' perspectives and keeps them consistent in space and time.

- **Parallel upsampler**: the two low-resolution frames are upsampled separately while keeping image style and motion consistent between them.
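As a minimal sketch of what "a single combined vector" could look like, the snippet below concatenates two players' per-frame controls. The `Controls` layout, the value ranges, and the use of plain concatenation are all assumptions made for illustration; the actual model may use a learned embedding instead.

```python
from typing import NamedTuple


class Controls(NamedTuple):
    """One player's inputs for a single frame (hypothetical layout)."""
    throttle: float  # assumed range 0.0 to 1.0
    brake: float     # assumed range 0.0 to 1.0
    steering: float  # assumed range -1.0 (left) to 1.0 (right)


def joint_action_vector(p1: Controls, p2: Controls) -> list[float]:
    """Concatenate both players' controls into one conditioning vector.

    Assumption: plain concatenation stands in for whatever combination
    the real action embedder performs.
    """
    return [*p1, *p2]


vec = joint_action_vector(Controls(1.0, 0.0, -0.2), Controls(0.3, 0.5, 0.1))
print(len(vec))  # 6 values: 3 per player
```

Because both players' inputs arrive in one vector, the downstream network can condition each generated view on what the other player is doing.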

Input data design: how the player views are merged

To give the model a shared view of the scene, the team tried two ways of merging the players' frames:

**Split-screen**: as in traditional split-screen games, the two images are stacked vertically, one above the other.

**Channel stacking**: the two frames are stacked along the RGB channel dimension, so that each pixel has six channels.
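The difference between the two layouts is easiest to see in the resulting shapes. The sketch below uses plain nested lists (height x width x channels) rather than a tensor library; the helper names are hypothetical and only illustrate the two merge strategies described above.

```python
Frame = list[list[list[int]]]  # height x width x channels


def solid_frame(height: int, width: int, rgb: tuple[int, int, int]) -> Frame:
    """Build a single-colour test frame."""
    return [[list(rgb) for _ in range(width)] for _ in range(height)]


def merge_split_screen(top: Frame, bottom: Frame) -> Frame:
    """Stack the two views vertically: height doubles, channels stay at 3."""
    return top + bottom


def merge_channels(a: Frame, b: Frame) -> Frame:
    """Stack the two views per pixel: height is unchanged, channels become 6."""
    return [[pa + pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def shape(frame: Frame) -> tuple[int, int, int]:
    return (len(frame), len(frame[0]), len(frame[0][0]))


view_1 = solid_frame(2, 3, (255, 0, 0))  # player 1 sees red
view_2 = solid_frame(2, 3, (0, 0, 255))  # player 2 sees blue

print(shape(merge_split_screen(view_1, view_2)))  # (4, 3, 3)
print(shape(merge_channels(view_1, view_2)))      # (2, 3, 6)
```

With channel stacking, the pixels at the same image position in both views sit at the same spatial location in the merged input, whereas split-screen places them half an image apart.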

Long-horizon modelling and context optimization

Problem: the physics of multi-car interaction (e.g. relative motion, braking effects) **changes slowly but is critical**, so it requires a longer context window.

- **Short-term dynamics (e.g. braking)**: 8 frames (0.25 seconds) are enough to model them.

- **Long-term interactions (e.g. overtaking, collisions)**: require a span of 0.5 to 1 second, or even longer.

Solution: **sparse temporal sampling + staged prediction**

- Use the 4 most recent frames plus 4 earlier frames taken at every 4th frame (8 frames in total), which covers a longer time span without increasing GPU memory pressure.

- Introduce curriculum learning: training starts with short-term predictions and gradually extends to predictions of up to 15 seconds, balancing learning efficiency with model expressiveness.
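Both ideas can be sketched in a few lines. The exact frame offsets and the shape of the curriculum are not given here, so the snippet assumes the strided frames start right behind the 4 recent ones, and that the prediction horizon grows linearly from 0.25 to 15 seconds; `context_indices` and `prediction_horizon` are hypothetical helper names.

```python
def context_indices(t: int, recent: int = 4, sparse: int = 4,
                    stride: int = 4) -> list[int]:
    """Indices of the context frames used to predict frame t.

    Assumed layout: the `recent` frames just before t, then `sparse`
    older frames spaced `stride` apart. Indices before the start of
    the clip are dropped.
    """
    dense = [t - i for i in range(1, recent + 1)]
    strided = [t - recent - stride * k for k in range(1, sparse + 1)]
    return sorted(i for i in strided + dense if i >= 0)


def prediction_horizon(step: int, total_steps: int,
                       start_s: float = 0.25, end_s: float = 15.0) -> float:
    """Rollout length in seconds at a given training step.

    Assumption: a linear ramp; the source only says the horizon grows
    gradually up to 15 seconds.
    """
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start_s + (end_s - start_s) * frac


print(context_indices(100))  # [80, 84, 88, 92, 96, 97, 98, 99]
print(prediction_horizon(0, 1000), prediction_horizon(1000, 1000))  # 0.25 15.0
```

Under these assumptions the model still receives 8 frames, but they reach back 20 frames instead of 8, which is the point of sampling sparsely.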

Dataset construction and training process

Game platform: Gran Turismo 4 (GT4)

- The Tsukuba Circuit track is used, which simplifies modelling and reproduction.

- The game does not natively support a 1v1 mode, so the team reverse-engineered it to force a true 1v1 race.

# Data acquisition strategy

- Each race is recorded twice (once from each player's perspective), and the two recordings are merged afterwards.

- The control inputs (throttle, brake, steering) are read from the **HUD elements** using computer vision, with no manual logging required.
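To illustrate the idea of reading a control value from the HUD, here is a minimal sketch that estimates how full a horizontal bar is from a grayscale crop. The bar layout, the left-to-right fill direction, and the brightness threshold are assumptions for illustration only, not the team's actual extraction code.

```python
def bar_fill_fraction(bar_crop: list[list[int]], threshold: int = 128) -> float:
    """Estimate how full a horizontal HUD bar is.

    bar_crop: grayscale rows (values 0 to 255) covering only the bar.
    Assumption: the filled part of the bar is brighter than `threshold`
    and the empty part is darker.
    """
    height = len(bar_crop)
    width = len(bar_crop[0])
    lit_columns = 0
    for x in range(width):
        column_mean = sum(row[x] for row in bar_crop) / height
        if column_mean >= threshold:
            lit_columns += 1
    return lit_columns / width


# Synthetic 4 x 10 crop: the left 7 columns are bright, the rest dark.
crop = [[255] * 7 + [0] * 3 for _ in range(4)]
print(bar_fill_fraction(crop))  # 0.7
```

Running a reader like this on every recorded frame would yield a per-frame control signal aligned with the video, which is what makes manual logging unnecessary.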

# Automated data generation

- Usable video data is generated with GT4's B-Spec mode (an AI driver) together with scripts that send control commands in batches.

- The team experimented with using the OpenPilot self-driving model for control, but ultimately chose B-Spec for its stability and lower resource use.

Research value and application prospects

Core breakthroughs:

- Modelling a **coherent world across multiple perspectives** (synchronized multi-view generation).

- Introducing efficient training strategies and a reproducible data pipeline, so that the community can reproduce and validate the results.

Potential applications: