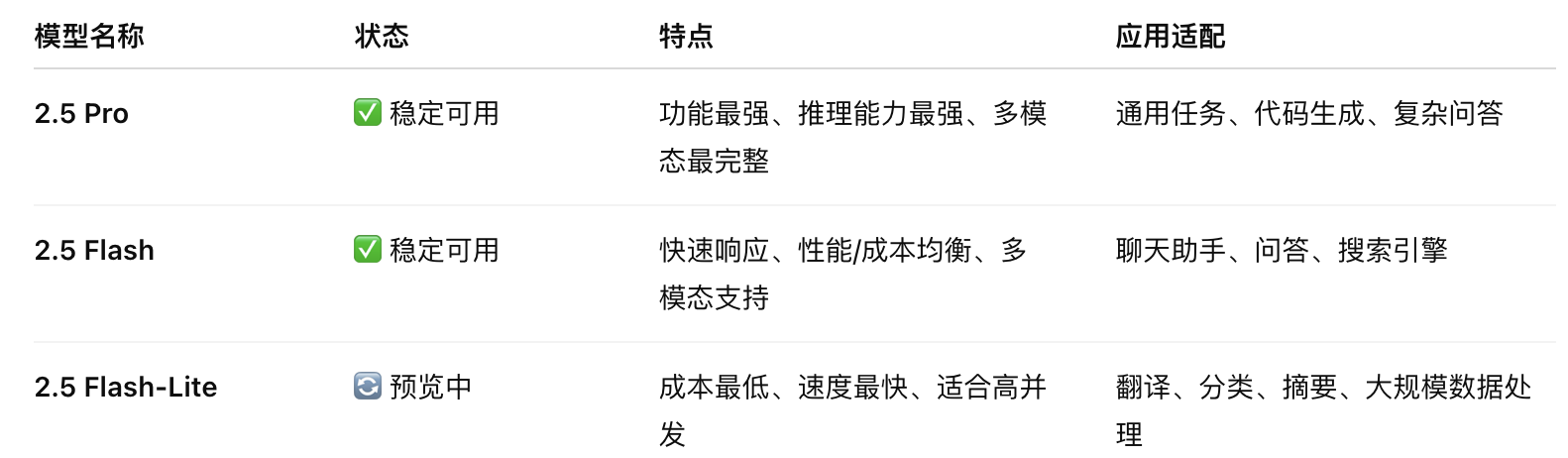

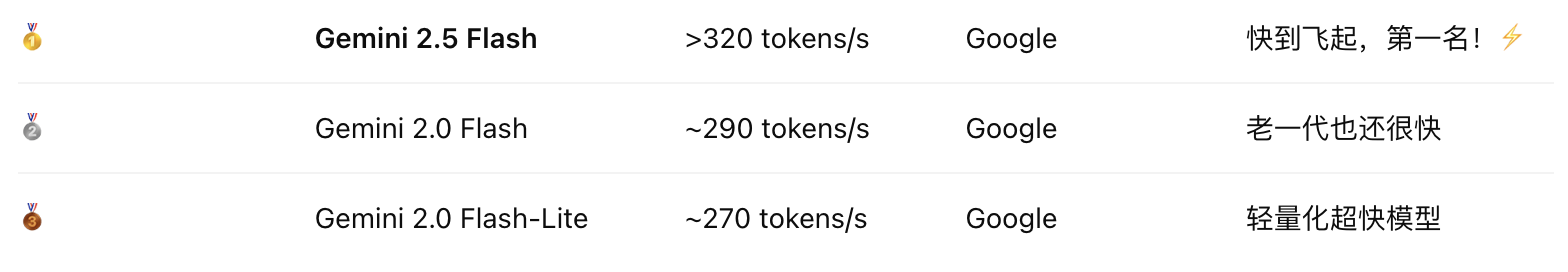

- A comparative overview of the three models

- Details of Gemini 2.5 Flash-Lite

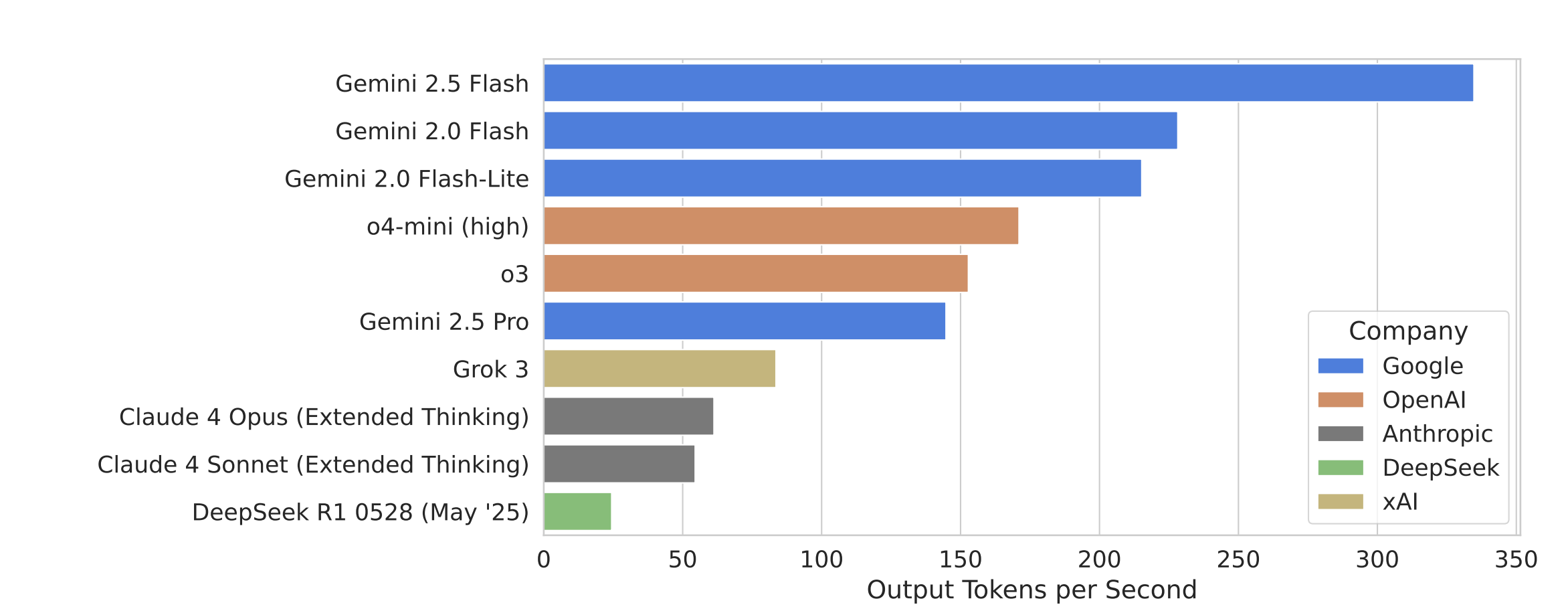

- Performance comparison

- Which AI model is the most cost-effective?

- Application case demonstration

- How to use

Google has officially released the Gemini 2.5 Flash and Pro models, which any developer can now use to build and scale production-ready AI applications.

- Launched alongside them is a new model, **Gemini 2.5 Flash-Lite (preview)**, the fastest and lowest-cost model in the Gemini 2.5 series.

- It is particularly suited to practical applications that need low-latency, efficient responses.

# A comparative overview of the three models

# Details of Gemini 2.5 Flash-Lite

### 1. The most cost-effective and fastest

- Compared with the Pro model, **inference is faster and cheaper**.

- **Latency** is lower and inference is faster than with 2.0 Flash-Lite and 2.0 Flash.

- The cost per call is significantly lower, which suits deployment in large-scale systems or user-facing scenarios.

- A lightweight option for **edge devices, mobile clients, and microservices**.
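To make the cost-per-call point concrete, here is a minimal sketch of the arithmetic behind token-based pricing. The tier names and prices are placeholders invented for illustration and are not real Gemini prices; only the formula (tokens times price per million tokens) is the point.

```python
def monthly_cost_usd(calls_per_day, input_tokens, output_tokens,
                     input_price_per_m, output_price_per_m, days=30):
    """Estimate monthly spend from per-million-token prices."""
    per_call = (input_tokens * input_price_per_m
                + output_tokens * output_price_per_m) / 1_000_000
    return per_call * calls_per_day * days


# Hypothetical prices in USD per 1M tokens. These are NOT real Gemini prices.
PLACEHOLDER_PRICES = {
    "cheap-tier": {"input": 0.10, "output": 0.40},
    "premium-tier": {"input": 1.00, "output": 8.00},
}

for name, price in PLACEHOLDER_PRICES.items():
    # Assumed workload: 100,000 calls per day, 500 input and 200 output tokens each.
    cost = monthly_cost_usd(100_000, 500, 200, price["input"], price["output"])
    print(f"{name}: ${cost:,.2f} per month")
```

At high call volumes the per-token price dominates the bill, which is why a lower-cost model matters most for large-scale deployments.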

### 2. Quality improvements on basic tasks

Flash-Lite outperforms the previous version on several standard benchmarks:

- **Code generation**

- **Mathematical and logical reasoning**

- **Scientific understanding tasks**

- **Multimodal text and image input parsing**

Its performance is more balanced and comprehensive than that of 2.0 Flash-Lite.

### 3. Support for the full Gemini 2.5 feature set

These features include:

- **Controllable thinking.** Gemini 2.5 Flash is one of the first models to support a “thinking budget”:

  - Users can set a budget for reasoning computation (for example, a number of tokens), trading off response speed against accuracy.

  - On complex tasks (for example, math problems or code generation), the model can spend more forward passes to improve accuracy.
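As a rough sketch of what setting a thinking budget can look like, the snippet below builds a JSON request body without sending it. The field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`) reflect my understanding of the public Gemini REST API rather than anything stated in this article, and the budget values are arbitrary; check the current API reference before relying on them.

```python
import json


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a request body that caps the tokens the model may spend on reasoning."""
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Assumed field names; verify against the API reference.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


# A small budget favors speed; a larger budget favors accuracy on hard tasks.
quick = build_request("What is the capital of France?", thinking_budget=128)
careful = build_request("Prove that the square root of 2 is irrational.",
                        thinking_budget=4096)
print(json.dumps(careful, indent=2))
```

Keeping the budget as an explicit parameter lets an application choose it per request, for example a low value for chat turns and a high value for math or code tasks.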

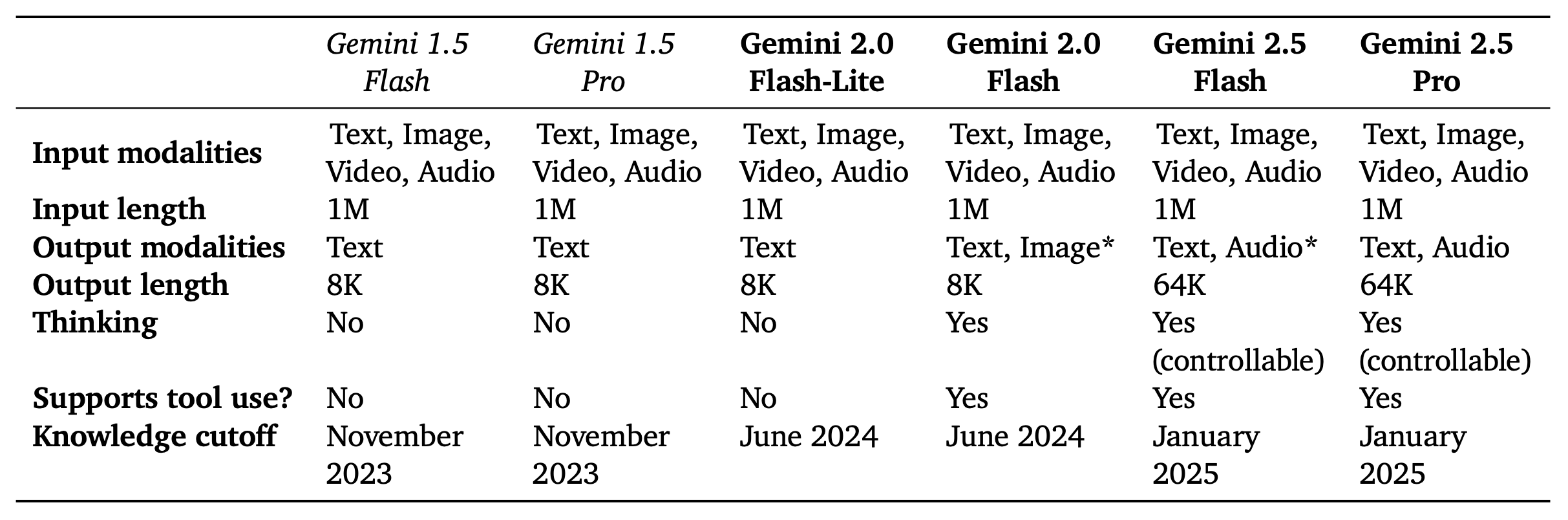

- **Multimodal processing.** Although it is not the Pro version, it retains full native multimodal support:

  - Input types include text, images, audio, and video.

  - It can process hour-long video content, structured images (for example, charts and UI screens), and speech.

  - It supports tasks such as extracting events, identifying scenes, and generating applications or summaries from videos.

- **Tool use.** It can call tools such as Google Search and code execution, and it has a one-million-token context window, on par with Gemini 2.5 Flash and Pro.
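The multimodal input and tool use described above might be combined in a single request along these lines. This is only a sketch of the request body: the `inline_data` part shape and the `google_search` tool entry are assumptions based on the public Gemini REST API, and the helper names are hypothetical.

```python
import base64


def image_part(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Wrap raw image bytes as a base64-encoded inline part (assumed shape)."""
    return {
        "inline_data": {
            "mime_type": mime_type,
            "data": base64.b64encode(image_bytes).decode("ascii"),
        }
    }


def build_multimodal_request(question: str, image_bytes: bytes,
                             use_search: bool = False) -> dict:
    """Combine a text question and an image, optionally enabling a search tool."""
    body = {"contents": [{"parts": [{"text": question},
                                    image_part(image_bytes)]}]}
    if use_search:
        # Assumed tool name; verify against the API reference.
        body["tools"] = [{"google_search": {}}]
    return body


request_body = build_multimodal_request(
    "What does this chart show?", b"<raw image bytes>", use_search=True)
print(sorted(request_body))
```

Large media such as hour-long video would normally be uploaded separately and referenced by URI rather than inlined; that path is omitted here.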

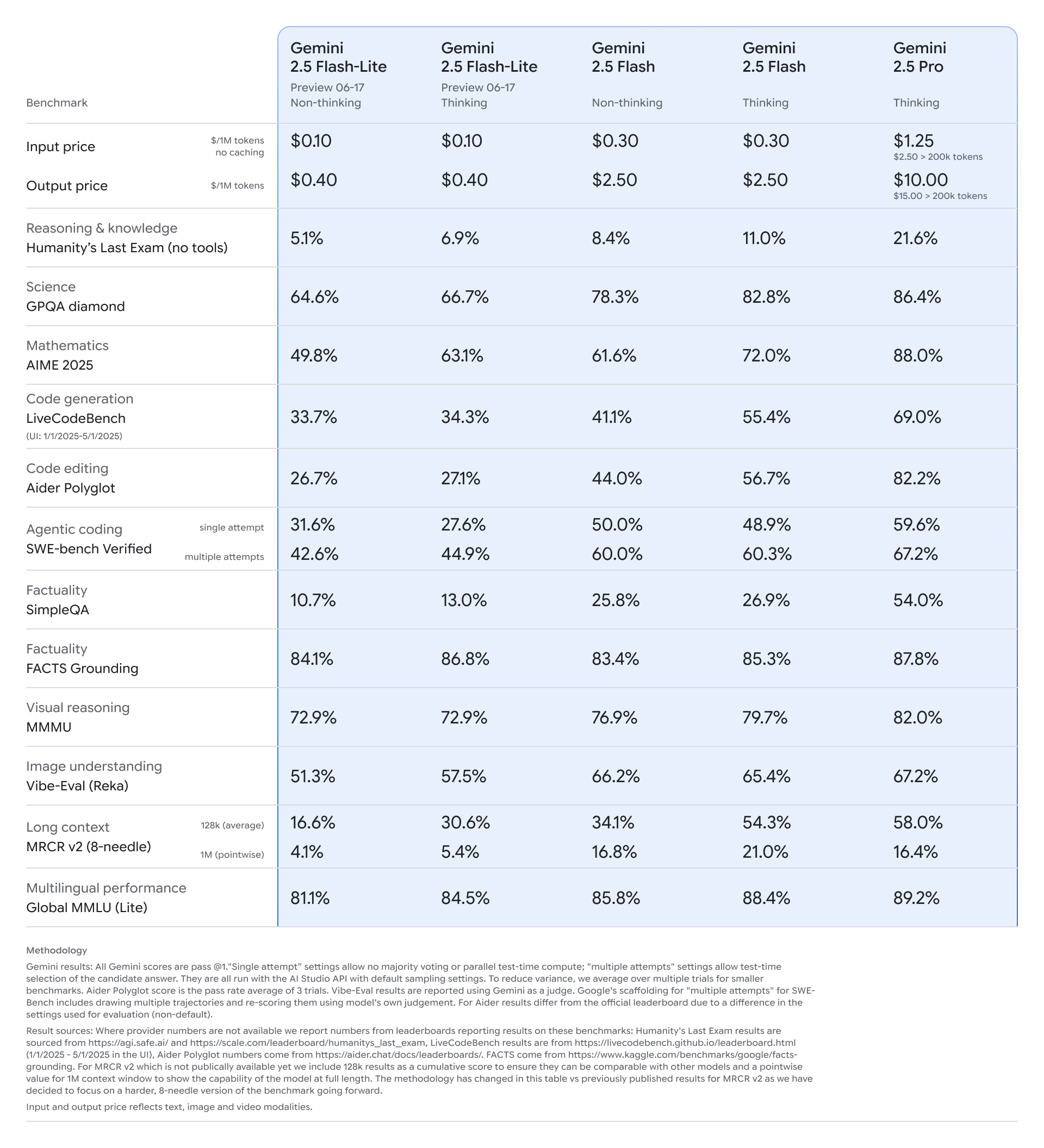

### 4. Performance comparison

2.5 Flash-Lite leads the older 2.0 Flash-Lite and 2.0 Flash across the board in response speed and reasoning quality.

- It scores higher on benchmarks covering coding, mathematics, science, reasoning, and multimodal tasks.

- Latency and cost are lower, making it one of the best-value models currently available.

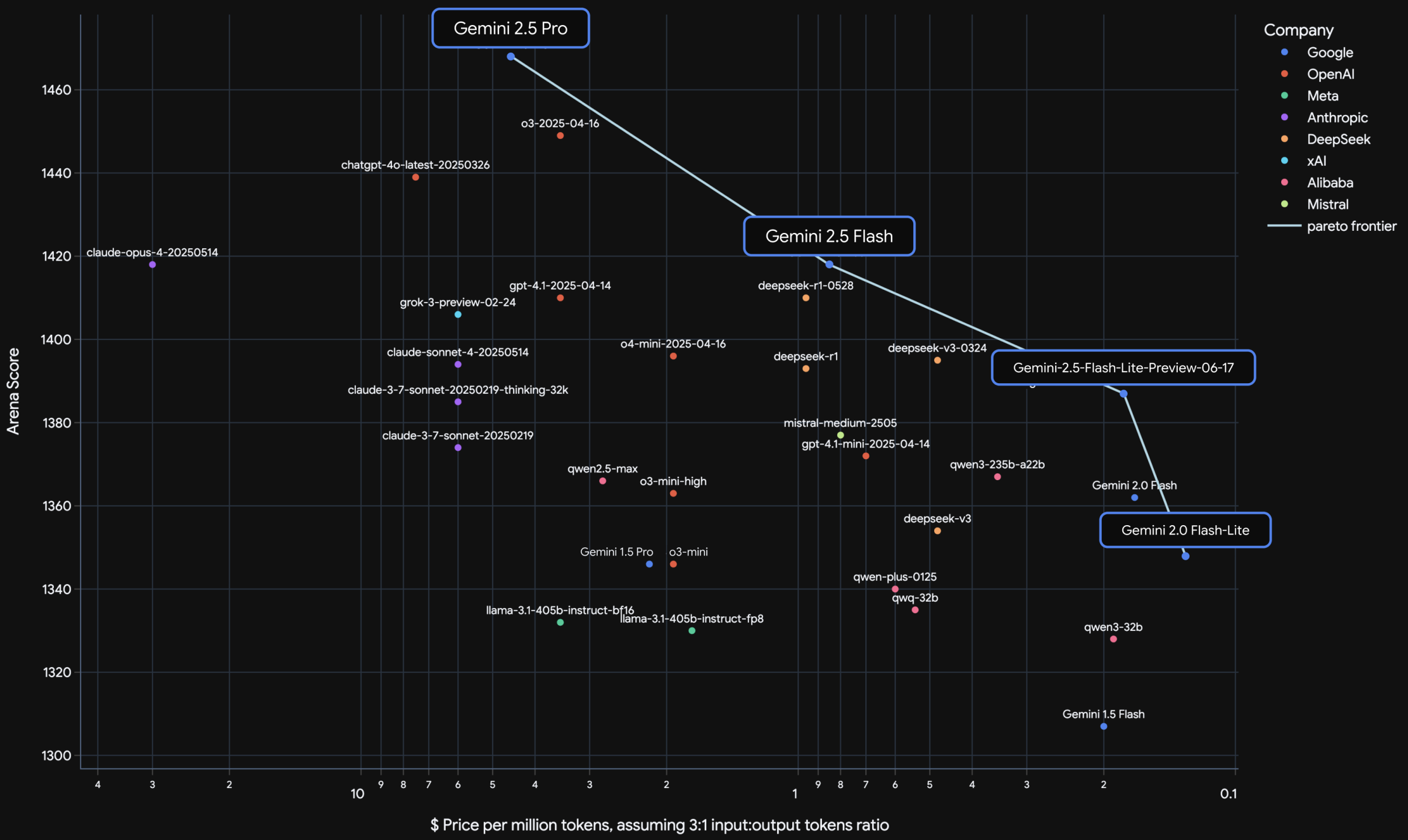

# Which AI model is the most cost-effective?

- The Gemini 2.5 series significantly raises the ceiling on price-performance and pushes the Pareto frontier as a whole toward the top-right corner.

- Flash-Lite is currently one of the most cost-effective models, especially for budget-sensitive applications that still need strong model capability.

- **“Thinking” + long context + multimodality** is the foundation of the Google Gemini series’ lead.

### How to read this chart

- **Vertical axis (higher is better):** how smart and capable the model is, for example at comprehension, writing code, and understanding video.

- **Horizontal axis (further right is cheaper):** how much it costs to use the model (the cost of processing 1 million tokens).

**Top left:** smart but expensive. **Top right:** smart and cheap! **The blue curve** connects the most cost-effective models available and is also known as the “Pareto frontier”.

### Gemini 2.5 Pro

- Google’s **most powerful AI brain** to date, exceptionally smart.

- For example, it can watch three hours of video, write web applications, and read the whole of Harry Potter in one go.

- Weakness: expensive! Best suited to scientific research and heavy-duty development.

### Gemini 2.5 Flash

- A smaller model, **smarter than GPT-4o yet cheaper**.

- Well balanced: fast, inexpensive, and able to think, making it suitable for chatbots, customer service, and search and question-answering systems.

### Gemini 2.5 Flash-Lite (new release!)

- An extremely cost-effective “small, fast knife” of an AI: very low cost, yet still smart!

- For example, it is ideal when thousands of customers need AI-generated answers at the same time.

- It sits toward the top-right corner of the chart: **the further right, the cheaper; the higher, the smarter; and the closer to the top right, the better.**
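The “Pareto frontier” idea in this section can be sketched in a few lines: a model is on the frontier if no other model is both at least as cheap and at least as capable. The model names, costs, and scores below are invented purely for illustration and do not correspond to any real benchmark.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float   # price per 1M tokens; lower is better
    score: float  # capability score; higher is better


def pareto_frontier(models):
    """Return the models that no cheaper-or-equal model matches or beats on score."""
    frontier = []
    best_score = float("-inf")
    # Walk from cheapest to most expensive; on equal cost, higher score first.
    for m in sorted(models, key=lambda m: (m.cost, -m.score)):
        # Keep a model only if it beats every cheaper (or equally cheap) model.
        if m.score > best_score:
            frontier.append(m)
            best_score = m.score
    return frontier


# Hypothetical data points, not real models or measurements.
candidates = [
    Model("A", cost=0.5, score=60),
    Model("B", cost=2.0, score=75),
    Model("C", cost=3.0, score=70),   # costs more than B but scores lower
    Model("D", cost=10.0, score=90),
]
print([m.name for m in pareto_frontier(candidates)])
```

Here model C drops out because B is both cheaper and more capable; the remaining models trace the curve of best available trade-offs.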

# Application case demonstration

- A research prototype: after a user uploads a large PDF file, the Flash-Lite model converts it into an interactive web application in real time, making complex content easier to understand and synthesize.

- The example highlights the model’s strength in handling complex structured information.

# How to use

All 2.5 Flash and Pro models are available on the following platforms:

- Google AI Studio

- Google Cloud Vertex AI

They are a good fit for businesses and researchers who need to deploy AI tools and services quickly.

Technical report: https://storage.googleapis.com/deepmind-media/gemini/gemini_v2_5_report.pdf
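For readers who want to try a model with an API key created in Google AI Studio, the following is a minimal sketch using only the Python standard library. The endpoint path, the `x-goog-api-key` header, the model ID `gemini-2.5-flash`, and the response layout are assumptions based on the public Gemini REST API and are not taken from this article; the `GEMINI_API_KEY` variable name is just a convention chosen here.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def make_request(prompt, api_key, model="gemini-2.5-flash"):
    """Build (but do not send) a generateContent HTTP request."""
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]})
    return urllib.request.Request(
        f"{API_ROOT}/models/{model}:generateContent",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response_json):
    """Join the text parts of the first candidate (assumed response layout)."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


def generate(prompt, api_key, model="gemini-2.5-flash"):
    """Send the request over the network and return the model's text reply."""
    with urllib.request.urlopen(make_request(prompt, api_key, model)) as resp:
        return extract_text(json.load(resp))
```

A call would then look like `generate("Summarize this in one sentence: ...", os.environ["GEMINI_API_KEY"])` after `import os`. Vertex AI uses a different endpoint and authentication scheme, which this sketch does not cover.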