- What are ComfyUI Native API Nodes?

- Supported API models at a glance

- How to use the workflows

- How to get started

- Parallel execution of API calls

- First native support for the VIDEO type

- A new visual brand

- What are the key takeaways?

- Who are these features for?

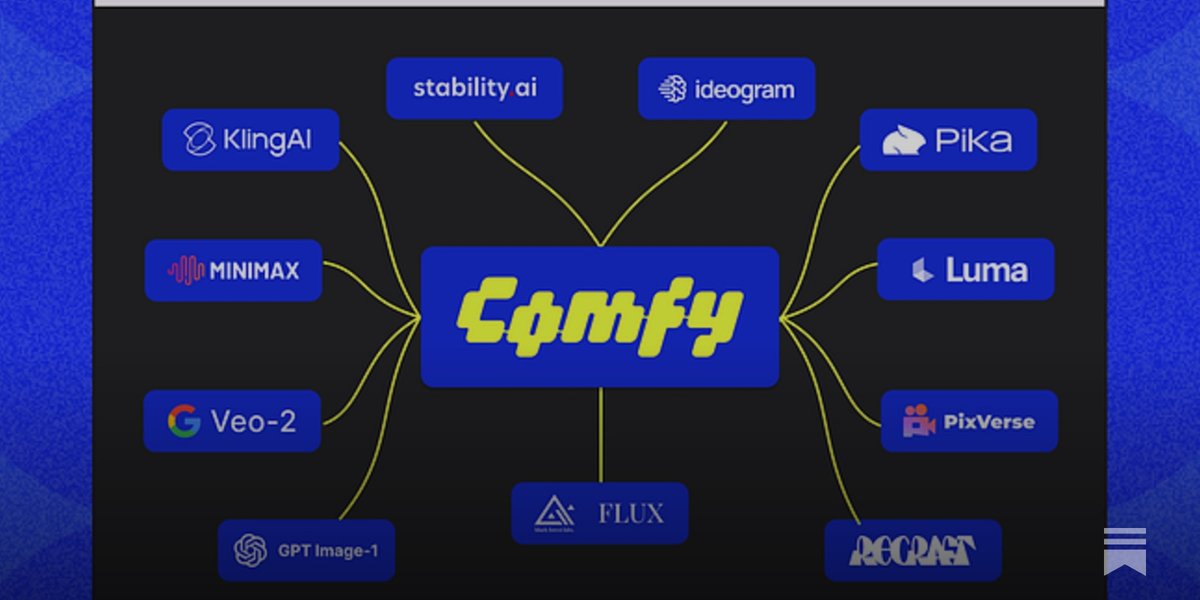

ComfyUI has launched Native API Nodes, which let users **call multiple paid model APIs** directly inside a workflow, including Google Veo2, OpenAI GPT-4o image, Stability AI, Luma, Recraft, Pika 2.2, PixVerse and Ideogram: 11 model families and 65 nodes in total. Users can run image generation, video generation, text-to-video and other models in parallel within the same ComfyUI workflow, **organizing all of their generation tasks without leaving the interface**. In addition, ComfyUI supports:

-

** Users bring their own API key** (reuse existing platform accounts);

-

** Parallel execution of API request** (accelerated multi-model call speed);

-

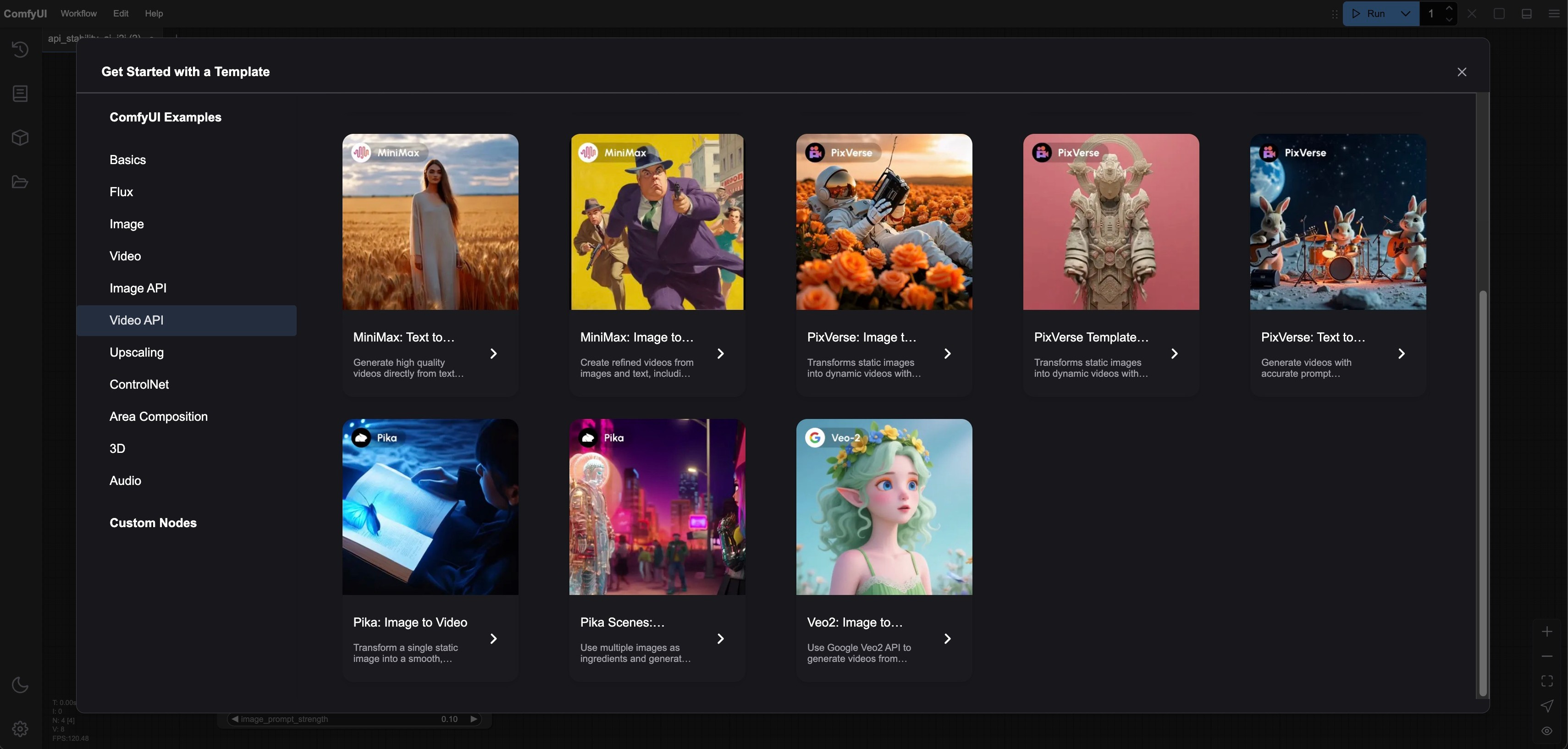

** First-time support for VIDEO type** (original video generation task);

-

new UI visual brand upgrade (in the 1990s, moving + Y2K technology wind);

All API node features are **optional**, and ComfyUI itself remains **completely free of charge**.

What are ComfyUI Native API Nodes?

ComfyUI started out as a node-based graphical tool for local image generation and is widely used in the Stable Diffusion community. This update introduces a completely new component, **Native API Nodes**, which let users call multiple mainstream model APIs directly from ComfyUI without leaving the interface or writing code. In practice, you can flexibly mix **commercial models (image, video, multimodal)** in a single workflow and schedule and run them together, so the whole creative pipeline lives in one place instead of being spread across several platforms.

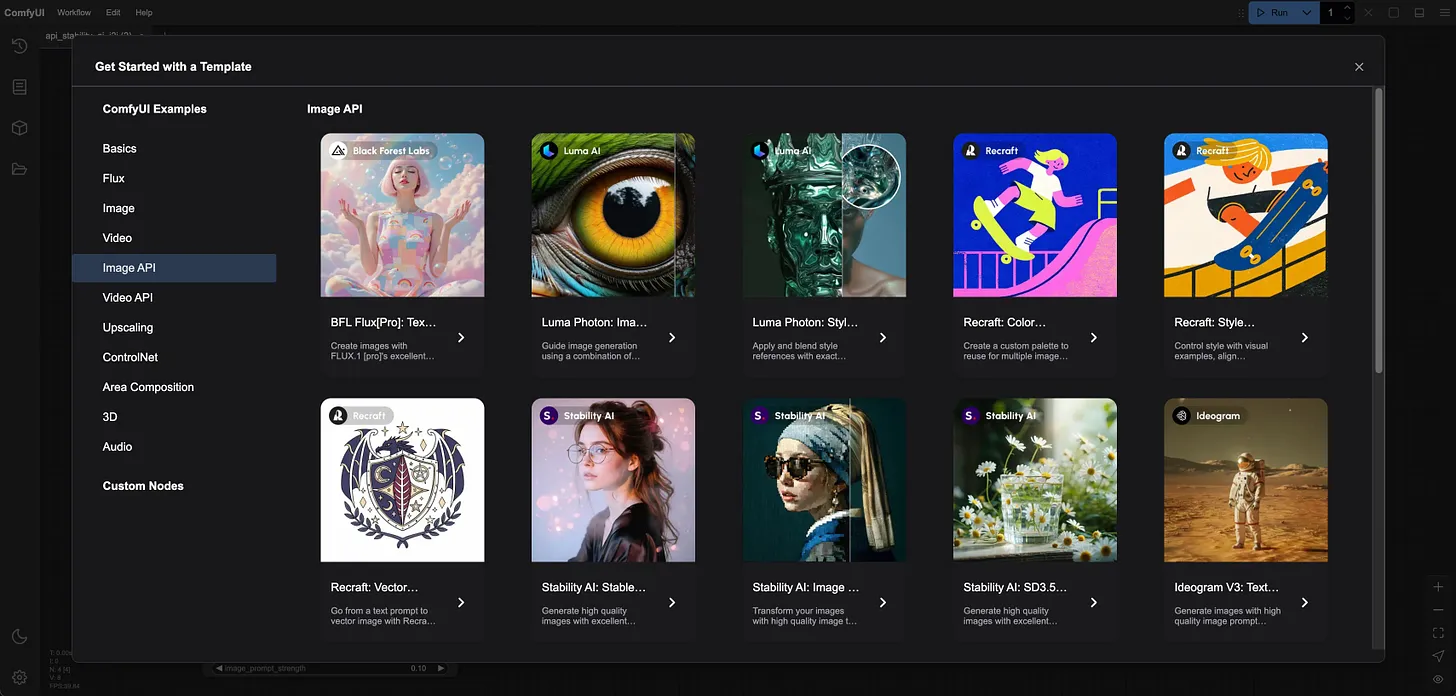

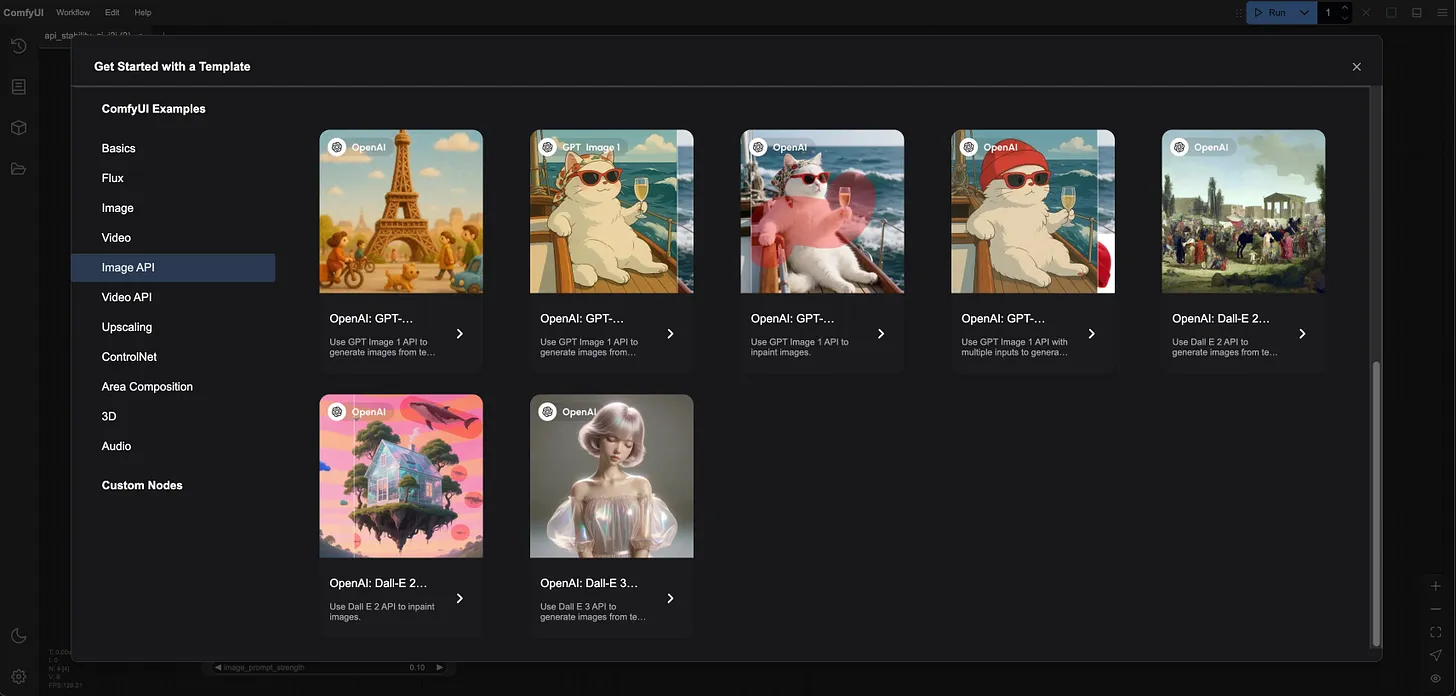

Supported API models at a glance

ComfyUI adds native support for the following 11 model families (65 nodes in total), covering image generation, video generation, text-to-video and image-to-video tasks.


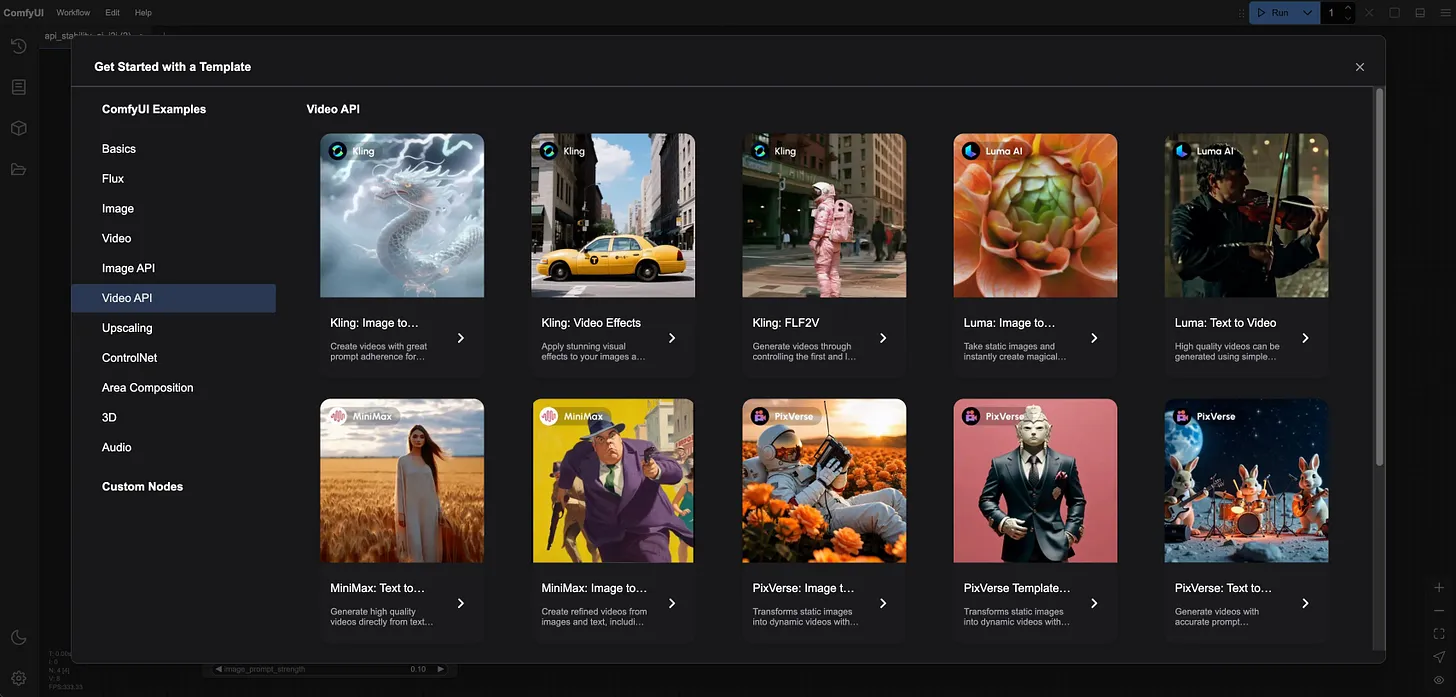

How to use the workflows

How to get started

- Update ComfyUI or ComfyUI Desktop.

- Log in or register an account, then purchase API credits or link an existing platform account.

- Open **Workflow > Browse Templates > Image API / Video API**.

- Select a template and run it directly.

Parallel execution of API calls

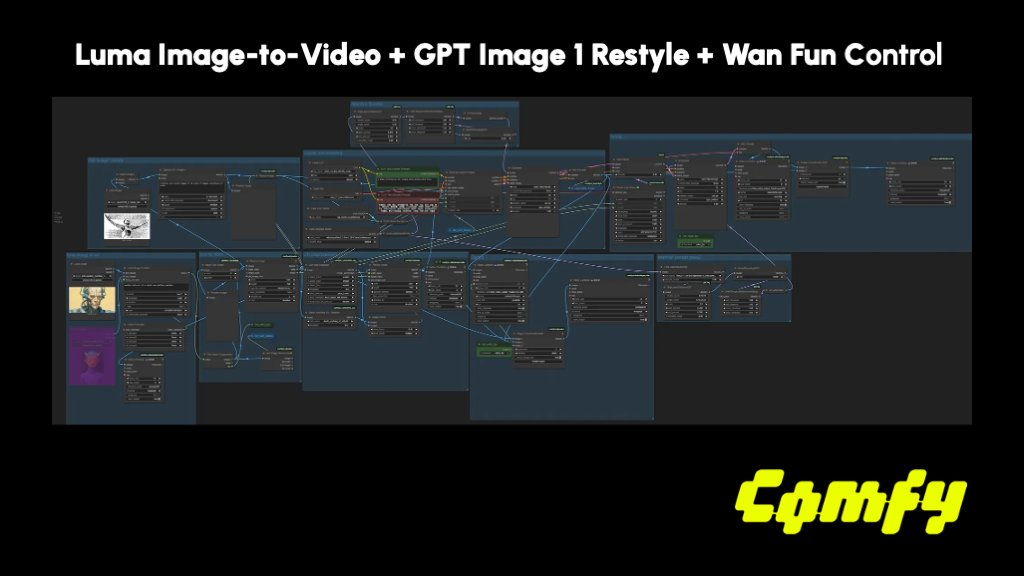

When a workflow calls several external APIs (e.g. image + video + subtitles), ComfyUI automatically runs independent API nodes in parallel, which can substantially shorten total generation time. Examples of multi-model collaboration:

- Text → GPT-Image generates a character sketch → Luma Photon adds realistic lighting

- Scene + prompt → PixVerse generates video clips

- Video + description → Pika generates animated camera shots
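To make the parallel-execution idea concrete, here is a minimal Python sketch of a scheduler that runs independent API calls concurrently. It is not ComfyUI's actual executor: the node names, the `fake_api_call` helper and the 0.3 s latencies are hypothetical stand-ins, and `asyncio.sleep` only simulates waiting on a remote service. Each node starts as soon as its own inputs are ready, so independent branches overlap and total time approaches the longest dependency chain rather than the sum of all calls.

```python
import asyncio
import time

# Hypothetical workflow: node name -> (simulated latency in seconds, upstream nodes).
# The names only echo the examples above; they are NOT real ComfyUI node classes.
# Assumes the graph is acyclic, as node workflows are; a cycle would deadlock here.
WORKFLOW = {
    "gpt_image_sketch": (0.3, []),
    "luma_photon_relight": (0.3, ["gpt_image_sketch"]),
    "pixverse_clip": (0.3, []),  # independent of the image branch
    "pika_animation": (0.3, ["pixverse_clip"]),
}


async def fake_api_call(name: str, latency: float, inputs: list[str]) -> str:
    """Stand-in for a remote API request; sleeping simulates network/generation time."""
    await asyncio.sleep(latency)
    return f"{name}({', '.join(inputs)})"


async def run_workflow(workflow: dict) -> dict[str, str]:
    """Run every node as soon as its dependencies finish; independent nodes overlap."""
    tasks: dict[str, asyncio.Task] = {}

    async def run_node(name: str) -> str:
        latency, deps = workflow[name]
        # Wait only for this node's own upstream results (a finished task can be awaited again).
        upstream = [await tasks[d] for d in deps]
        return await fake_api_call(name, latency, upstream)

    # Create all tasks before any of them runs, so every lookup in tasks[d] succeeds.
    for name in workflow:
        tasks[name] = asyncio.create_task(run_node(name))

    results = await asyncio.gather(*tasks.values())
    return dict(zip(tasks.keys(), results))


if __name__ == "__main__":
    start = time.perf_counter()
    outputs = asyncio.run(run_workflow(WORKFLOW))
    elapsed = time.perf_counter() - start
    for node, value in outputs.items():
        print(node, "->", value)
    print(f"elapsed: {elapsed:.2f}s")
```

With two independent branches of two 0.3 s calls each, the run should take roughly 0.6 s, versus about 1.2 s if every call were made one after another.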

First native support for the VIDEO type

This is the first time ComfyUI natively supports video-generation nodes (the VIDEO type), which means:

- It is no longer limited to image tasks;

- Combined video-and-image pipelines are supported;

- Cross-model collaboration becomes possible (e.g. image-to-video, or reworking segments of generated video);

- It lays the groundwork for future audio and 3D extensions.

A new visual brand

Alongside the technical upgrades, ComfyUI has officially launched a brand-new visual identity:

- **Logo:** built from modular blocks that echo the node-graph workflow;

- **Fonts and colours:** combine a 1990s dynamic style with a Y2K digital feel;

- **Message:** keeps the community spirit of being “free, accessible and hackable”, emphasizing that a practical tool can coexist with open creativity.

As the ComfyUI team puts it: “What we want to convey is that ComfyUI is still free and open, but it has also become a powerful tool that can really be used in production.”

What are the key takeaways?

- **A unified multi-model access platform:** multiple mainstream APIs can be called within one workflow, so there is no longer any need to switch platforms or manually upload and download assets.

- **Free core + optional commercial APIs:** ComfyUI itself remains open and free of charge, and API nodes are used only on demand.

- **A true multimodal generation pipeline for creators:** images, video, text, structured data and styles come together in one place, and audio and 3D nodes may be added in the future.

Who are these features for?

- Graphic content creators (e.g. images for Weibo posts, WeChat Official Account articles and short videos)

- AI video editors (users of Pika, Veo2, PixVerse)

- Advanced Stable Diffusion users (who want to mix in other models)

- Designers and advertising creatives (mixed image generation)

- Educational content developers (video transfer, videos with AI animation)

- Product managers and developers (prototyping AI workflows)

Official announcement: https://blog.comfy.org/p/comfyui-native-api-nodes