
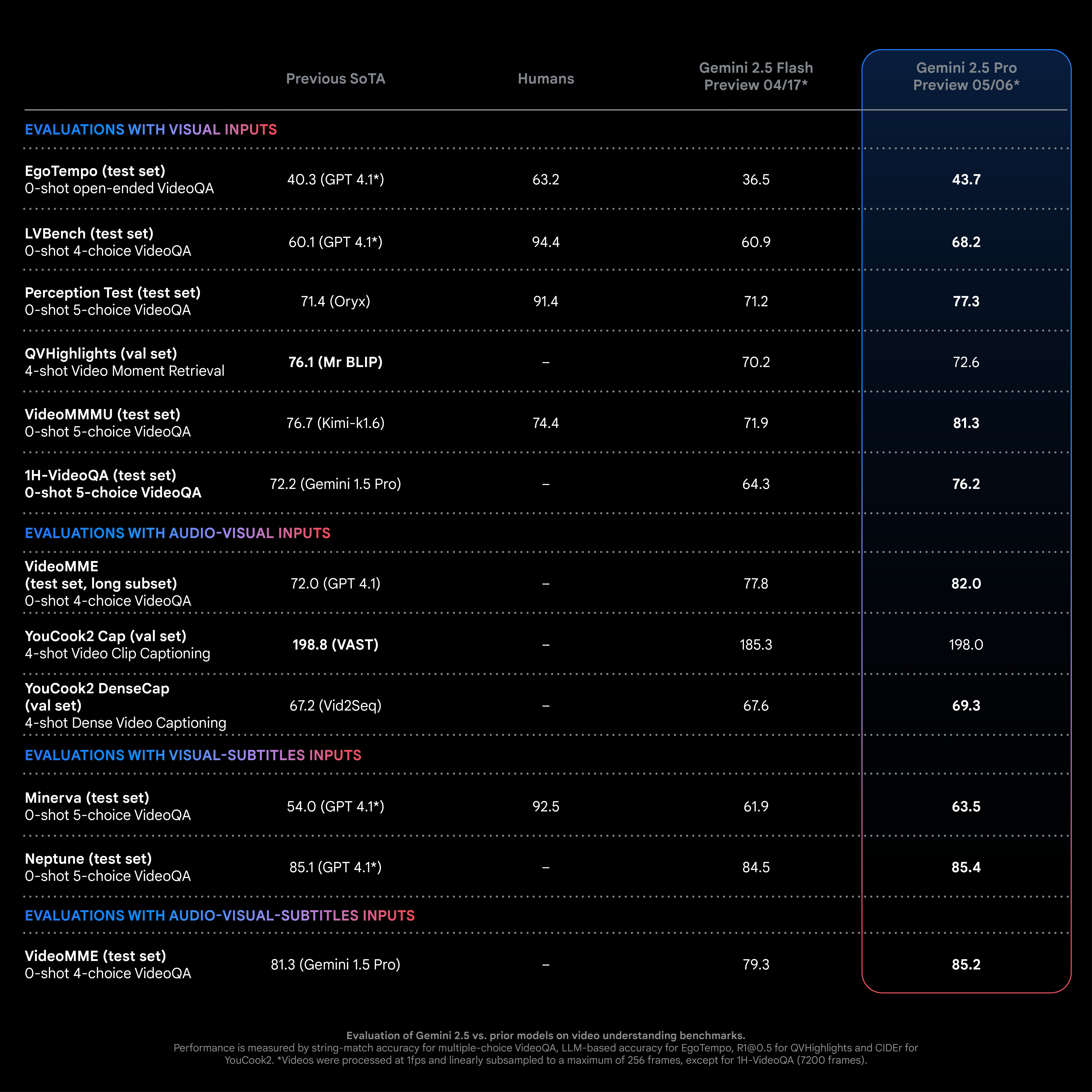

Google’s recently launched Gemini 2.5 Pro (I/O preview version 05-06) and Gemini 2.5 Flash models represent Google’s current state of the art in multimodal AI, **particularly in video understanding**. Gemini 2.5 is the world’s first general-purpose multimodal model with native video processing that can understand and analyse video and turn it into structured applications. **Compared with earlier and similar models:**

- **Compared with GPT-4.1:** Gemini 2.5 Pro leads on multiple video understanding tasks under identical task settings and inputs (same prompt, same video frames).
- **Compared with fine-tuned task-specific models:** on tasks such as YouCook2 (dense video captioning) and QVHighlights (moment retrieval), it approaches or exceeds their performance **without any task-specific fine-tuning**.
- **Flash version:** suited to resource-constrained scenarios; it costs less while performing close to Pro.

**Supported modalities:**

- Video (up to 7,200 frames / 6 hours)
- Audio (speech analysis, event recognition)
- Text (prompts, subtitles, captions, etc.)
- Code (generating application code and commands)

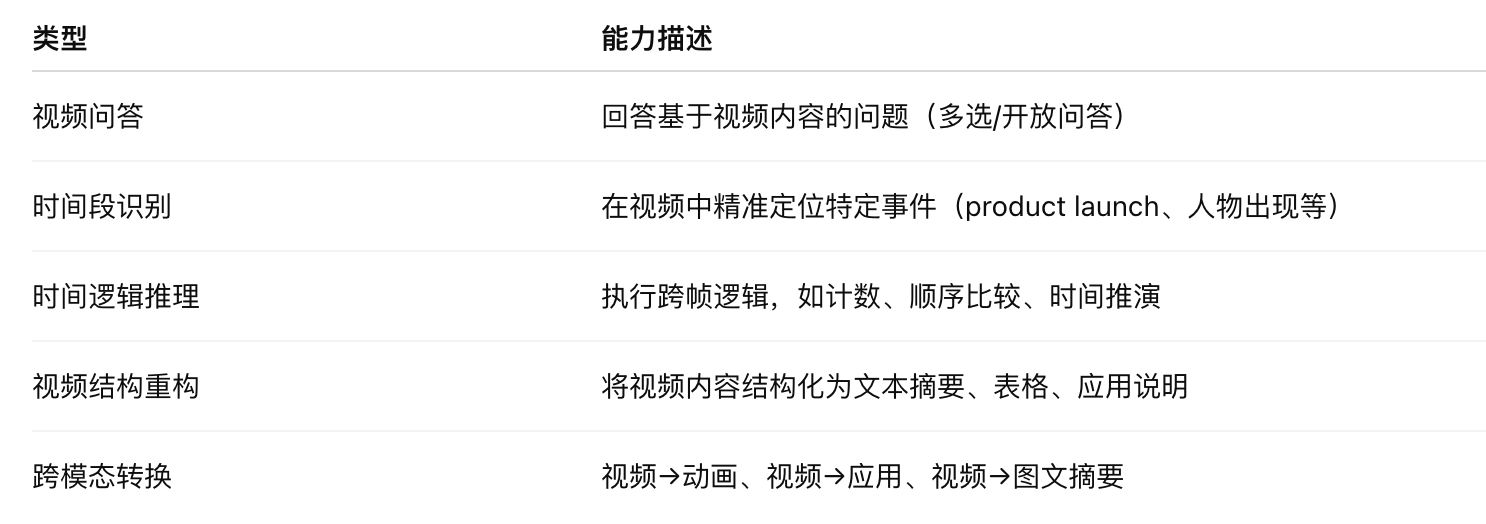

# Video understanding demonstrations

Gemini 2.5 supports a wide range of video task types; four representative demonstrations follow.

# 1. Video to Learning App

Process:

1. Input a YouTube link plus a task prompt.
2. The model analyses the video and generates a specification document for a learning app.
3. The specification is translated into code (e.g., an interactive teaching applet).

Application scenarios:

- Automatically generating educational tools such as interactive courseware, simulators, and step-by-step slide decks
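The two-stage flow above (video to specification, specification to code) can be sketched as a simple prompt chain. This is a minimal sketch, not the demo app’s implementation: the prompts, the `LearningApp` container, and the `generate` callable (a stand-in for whatever model client you use) are all hypothetical. A stub model keeps it runnable offline.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical model client: (prompt, optional video URL) -> response text.
GenerateFn = Callable[[str, Optional[str]], str]

# Illustrative prompts only; not the prompts used by the AI Studio demo.
SPEC_PROMPT = (
    "Watch this video and write a specification for an interactive learning app "
    "that teaches its key ideas: learning goals, interactions, and UI layout."
)
CODE_PROMPT = (
    "Implement this specification as one self-contained HTML file with inline "
    "JavaScript.\n\nSPECIFICATION:\n{spec}"
)


@dataclass
class LearningApp:
    spec: str  # stage 1 output: natural-language specification
    code: str  # stage 2 output: application source code


def video_to_learning_app(video_url: str, generate: GenerateFn) -> LearningApp:
    # Stage 1: the model watches the video and writes the spec.
    spec = generate(SPEC_PROMPT, video_url)
    # Stage 2: only the spec (no video) is sent to produce the code.
    code = generate(CODE_PROMPT.format(spec=spec), None)
    return LearningApp(spec=spec, code=code)


if __name__ == "__main__":
    # Offline stub standing in for a real API call.
    def fake_generate(prompt: str, video_url: Optional[str]) -> str:
        if video_url is not None:
            return "Spec: a quiz app covering the video's three main concepts."
        return "<html><!-- built from: " + prompt.splitlines()[-1] + " --></html>"

    app = video_to_learning_app("https://www.youtube.com/watch?v=VIDEO_ID", fake_generate)
    print(app.spec)
    print(app.code)
```

Sending only the spec to the second call is a design choice of this sketch: it keeps the code-generation step cheaper and makes the spec a reviewable intermediate artifact.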

# 2. Video to p5.js Animation

Input a video plus a prompt (e.g., “Visualize all the landmarks that appear in it”). Gemini 2.5 analyses the video frames in sequence and generates a JavaScript animation (built with p5.js) that mirrors the structure of the content.

Application scenarios:

- Information visualization, quick summary presentations, and visual meeting minutes
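Model responses usually wrap the generated sketch in a Markdown code fence, so a small post-processing step is needed before the animation can run in a browser. The sketch below assumes the model was asked to return a single JavaScript code block; `P5_PROMPT` and `extract_p5_sketch` are illustrative names, and checking for `function setup` is a heuristic rather than real validation.

```python
import re
from typing import Optional

TICKS = "`" * 3  # built at runtime so this snippet nests cleanly in Markdown

# Illustrative prompt (an assumption, not the demo's actual prompt).
P5_PROMPT = (
    "Watch this video and visualize all the landmarks that appear in it as a "
    "p5.js animation, in order of appearance. Return the complete sketch in "
    "one JavaScript code block."
)

# Captures the body of a fenced block tagged javascript/js, or untagged.
FENCE_RE = re.compile(TICKS + r"(?:javascript|js)?[ \t]*\n(.*?)" + TICKS, re.DOTALL)


def extract_p5_sketch(response_text: str) -> Optional[str]:
    """Return the first fenced code block that defines a p5.js setup()."""
    for body in FENCE_RE.findall(response_text):
        # Heuristic: a p5.js global-mode sketch usually defines setup().
        if "function setup" in body:
            return body.strip()
    return None


if __name__ == "__main__":
    reply = (
        "Here is the animation:\n"
        + TICKS + "javascript\n"
        + "function setup() { createCanvas(400, 400); }\n"
        + "function draw() { background(220); }\n"
        + TICKS + "\nSave it as sketch.js next to p5.js."
    )
    print(extract_p5_sketch(reply))
```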

# 3. Moment Retrieval

By combining audio and video analysis, Gemini 2.5 can:

- Accurately identify multiple information-dense segments in talks and videos
- Output the start and end time of each segment, plus a topic label

Example: in the Google Cloud Next 2025 opening keynote video, 16 segments related to product announcements were identified automatically.
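To make segment output machine-readable, one option is to ask for one segment per line in a fixed format and parse the reply. The `START - END | label` format, the `Segment` type, and the parser below are assumptions for illustration, not a format Gemini guarantees; prose lines and empty or reversed ranges are simply dropped.

```python
import re
from dataclasses import dataclass

# Assumed reply format requested in the prompt (hypothetical), one per line:
#   00:01:05 - 00:02:30 | Gemini 2.5 announcement
# Timestamps may be MM:SS or HH:MM:SS.
LINE_RE = re.compile(
    r"^\s*(\d{1,2}(?::\d{2}){1,2})\s*-\s*(\d{1,2}(?::\d{2}){1,2})\s*\|\s*(.+?)\s*$"
)


@dataclass(frozen=True)
class Segment:
    start_s: int  # start time, seconds from the beginning of the video
    end_s: int    # end time, seconds
    label: str    # topic label written by the model


def to_seconds(ts: str) -> int:
    """Convert 'MM:SS' or 'HH:MM:SS' to seconds."""
    seconds = 0
    for part in ts.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def parse_segments(text: str) -> list[Segment]:
    """Parse 'START - END | label' lines; skip prose and invalid ranges."""
    segments = []
    for line in text.splitlines():
        m = LINE_RE.match(line)
        if not m:
            continue  # not a segment line (e.g. an intro sentence)
        start, end = to_seconds(m.group(1)), to_seconds(m.group(2))
        if end > start:  # drop empty or reversed ranges
            segments.append(Segment(start, end, m.group(3)))
    return sorted(segments, key=lambda s: s.start_s)


if __name__ == "__main__":
    reply = """Here are the segments:
00:01:05 - 00:02:30 | Gemini 2.5 announcement
03:10 - 04:00 | Vertex AI update
"""
    for seg in parse_segments(reply):
        print(seg)
```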

# 4. Temporal Reasoning

Supports counting and logical reasoning about **when events occur over time** in a video. Example: counting how many times the main character uses a phone in a video; the model correctly identified and counted 17 instances.
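A count like this is easier to check if the model is asked for the timestamp of every occurrence and the counting is done in code. The sketch below assumes such a list of detection times in seconds and merges detections closer than a hypothetical `max_gap_s` threshold into one event, so one long phone call is not counted several times.

```python
def group_events(timestamps_s: list[float], max_gap_s: float = 2.0) -> list[tuple[float, float]]:
    """Merge detection times (seconds) into (start, end) events.

    A detection within max_gap_s of the current event's last detection extends
    that event; otherwise it starts a new one. max_gap_s is a heuristic to tune.
    """
    events: list[tuple[float, float]] = []
    for t in sorted(timestamps_s):
        if events and t - events[-1][1] <= max_gap_s:
            events[-1] = (events[-1][0], t)  # same ongoing event
        else:
            events.append((t, t))  # gap too large: a new event starts
    return events


if __name__ == "__main__":
    # Hypothetical per-second detections of "main character uses phone".
    detections = [12, 13, 14, 40, 41, 95, 96, 97, 98]
    events = group_events(detections)
    print(len(events), events)
```

The event count is `len(group_events(...))`; keeping the (start, end) pairs lets each counted instance be spot-checked against the video.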

# How to use

Video understanding in Gemini 2.5 Flash and Pro is now available in Google AI Studio, the Gemini API, and Vertex AI. The Gemini API and Google AI Studio accept YouTube videos directly, so anyone can build applications on top of billions of videos.
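For the Gemini API, a YouTube video can be passed as a `fileData` part whose `fileUri` is the video URL, followed by the text prompt. The sketch below builds that request body with the Python standard library; the default model ID, the `GEMINI_API_KEY` environment variable, and the helper names are assumptions, so check the current Gemini API reference before relying on them. Setting `mediaResolution` to `MEDIA_RESOLUTION_LOW` trades per-frame detail for longer videos.

```python
import json
import os
import urllib.request

# Assumptions: v1beta REST endpoint and the default model ID below; check the
# current Gemini API reference for the model IDs available to you.
API_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_video_request(youtube_url: str, prompt: str, low_resolution: bool = False) -> dict:
    """Build a generateContent body: the YouTube URL as fileData, then the prompt."""
    body = {
        "contents": [
            {
                "parts": [
                    {"fileData": {"fileUri": youtube_url}},
                    {"text": prompt},
                ]
            }
        ]
    }
    if low_resolution:
        # Fewer tokens per frame, so longer videos fit in the context window.
        body["generationConfig"] = {"mediaResolution": "MEDIA_RESOLUTION_LOW"}
    return body


def generate(body: dict, model: str = "gemini-2.5-flash") -> dict:
    """POST the request. Needs network access and GEMINI_API_KEY set."""
    request = urllib.request.Request(
        API_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": os.environ["GEMINI_API_KEY"],
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    body = build_video_request(
        "https://www.youtube.com/watch?v=VIDEO_ID",
        "Summarize this video in three sentences.",
        low_resolution=True,
    )
    print(json.dumps(body, indent=2))  # inspect only; generate(body) would send it
```

Calling `generate(body)` sends the request; the answer text is normally at `candidates[0].content.parts[0].text` in the returned JSON.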

- Low-resolution video processing, which allows about 6 hours of video to be processed
- A context window of up to 2 million tokens
- Highly competitive video understanding performance (84.7% vs. 85.2% on VideoMME)
- Direct parsing of YouTube links in the API
- Suitable for SaaS development scenarios such as adaptive education, knowledge management, and automated content creation
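The 6-hour and 2-million-token figures are roughly consistent with simple per-second arithmetic. This sketch assumes the per-second costs documented for the Gemini API at the time of writing (1 frame sampled per second, 258 tokens per frame at default media resolution or 66 at low resolution, plus 32 audio tokens per second) and ignores metadata tokens; verify the constants against the current docs.

```python
# Assumed per-second costs, per the Gemini API video docs at the time of
# writing (verify before use): frames sampled at 1 fps, 258 tokens per frame
# at default media resolution or 66 at low, plus 32 audio tokens per second.
# Per-video metadata tokens are ignored.
FRAMES_PER_SECOND = 1
TOKENS_PER_FRAME = {"default": 258, "low": 66}
AUDIO_TOKENS_PER_SECOND = 32


def tokens_per_second(resolution: str) -> int:
    """Approximate tokens consumed by one second of video with audio."""
    return FRAMES_PER_SECOND * TOKENS_PER_FRAME[resolution] + AUDIO_TOKENS_PER_SECOND


def max_video_hours(context_tokens: int, resolution: str, prompt_tokens: int = 0) -> float:
    """Rough upper bound on the video length that fits in the context window."""
    usable = context_tokens - prompt_tokens
    return usable / tokens_per_second(resolution) / 3600


if __name__ == "__main__":
    for res in ("default", "low"):
        hours = max_video_hours(2_000_000, res)
        print(f"{res}: {tokens_per_second(res)} tokens/s, about {hours:.1f} h in 2M tokens")
```

Under these assumptions, low resolution costs 98 tokens per second, so 2,000,000 tokens hold about 5.7 hours of video (close to the 6 hours quoted above), while default resolution at 290 tokens per second fits just under 2 hours.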

Try it online (Video to Learning App): https://aistudio.google.com/u/1/apps/bundled/video-to-learing-app?showPreview=true