
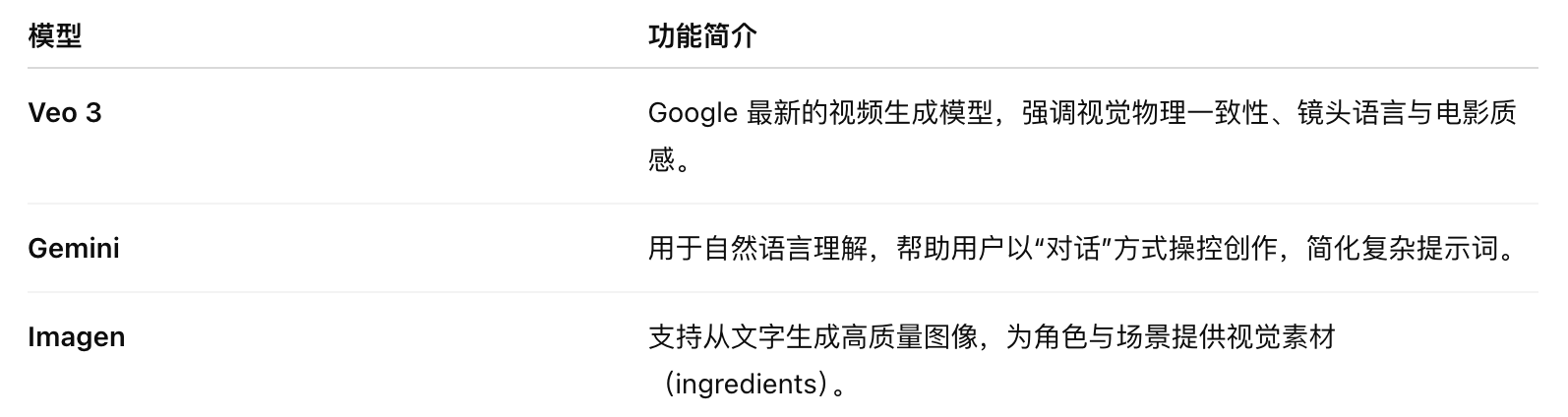

Amid a fast-changing ecosystem of creative tools, Google announced a new generation of generative media models and creative tools at Google I/O 2025, spanning video, images, music, and content provenance. Among them is Flow, an AI filmmaking platform **powered by Google's most advanced models (including Veo, Imagen, and Gemini)**. Flow lets creators generate cinematic clips from natural-language descriptions, without needing visual-effects or animation expertise.

Flow is not just another video production tool. It is a complete creative system built together with filmmakers and designed for storytelling and the film industry. Its launch means the path from idea to film-grade footage can be faster, freer, and more efficient than ever before. What separates Flow from ordinary AI video generators is that it does more than "create images": it is designed to **understand story logic, the grammar of camera language, and the continuity of visual style**. You describe what you want, and Flow can generate cinematic shots, control the camera, edit scenes, keep characters consistent, and more.

Flow is available to subscribers of the Google AI Pro and Ultra plans. Visit: flow.google

# Behind the three AI engines: co-driving the creation process

- Generate characters and scenes from text descriptions;
- Keep characters consistent across different shots;
- Control camera movement, lighting, and perspective;
- Create smooth transitions between scenes, with support for the concept of the "cut";
- Assemble multiple generated clips into a complete short-film structure.

(Demo clips: seamless transitions; cinema-grade camera control.)

# Key features: not just a generator, but a director's toolbox

Beyond image and video generation, Flow provides a set of functional modules that support the whole creative production workflow:

## 1. Camera Controls

Users can manually specify the camera's angle, path, and type of motion, for example a "close-up push-in" or a "slow pan", to give shots a directed, cinematic feel.

## 2. Scenebuilder

Extends existing shots or creates natural transitions between scenes, for example to reveal more of the setting or to change perspective.

## 3. Asset Management

Centralizes the generated "ingredients" (characters, backgrounds, objects) so they can be reused across shots to keep the style consistent.
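The source does not describe how Flow stores ingredients internally, so the following is only a hypothetical Python sketch of the idea behind Asset Management. Each ingredient's description is stored once and expanded verbatim into every shot that uses it, so a character or setting is described identically from clip to clip. The `Ingredient`, `Shot`, and `AssetLibrary` names are illustrative assumptions, not Flow's API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Ingredient:
    """A reusable asset (hypothetical model, not Flow's format)."""
    asset_id: str
    kind: str          # "character", "background", or "object"
    description: str   # the text description used to generate it


@dataclass
class Shot:
    """One clip, built from ingredient IDs plus an action prompt."""
    action: str
    ingredient_ids: list[str] = field(default_factory=list)


class AssetLibrary:
    """Central store so every shot reuses the same ingredient descriptions."""

    VALID_KINDS = {"character", "background", "object"}

    def __init__(self) -> None:
        self._assets: dict[str, Ingredient] = {}

    def add(self, ingredient: Ingredient) -> None:
        if ingredient.kind not in self.VALID_KINDS:
            raise ValueError(f"unknown kind: {ingredient.kind}")
        self._assets[ingredient.asset_id] = ingredient

    def compose_prompt(self, shot: Shot) -> str:
        """Expand a shot into one prompt, reusing stored descriptions verbatim."""
        missing = [i for i in shot.ingredient_ids if i not in self._assets]
        if missing:
            raise KeyError(f"shot references unknown assets: {missing}")
        parts = [self._assets[i].description for i in shot.ingredient_ids]
        return "; ".join(parts + [shot.action])


library = AssetLibrary()
library.add(Ingredient("hero", "character", "a red-haired courier in a yellow raincoat"))
library.add(Ingredient("city", "background", "a rain-soaked neon street at night"))

shots = [
    Shot("she runs toward the camera", ["hero", "city"]),
    Shot("she stops and looks up at the sky", ["hero", "city"]),
]
for shot in shots:
    print(library.compose_prompt(shot))
```

Failing loudly on an unknown asset ID, rather than silently dropping it, is one way to catch a shot that would otherwise lose its character reference.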

## 4. Flow TV (showcase and learning platform)

A collection of short clips and films made with Flow, each shown with the prompts and techniques used to make it, so creators can learn from them and adapt them.

# Flow showcase

Google invited several forward-looking filmmakers and artists to collaborate on Flow's development. Three of them are introduced below:

- **Dave Clark**: an award-winning director known for "Battalion" and "NinjaPunk" who has been working to integrate AI into filmmaking. His new film, "Freelancers", made with Flow and other AI tools, follows the growth and adventures of two separated brothers.
- **Henry Daubrez**: a digital artist who is using Flow to make a new film, "Electric Pink", continuing his exploration of loneliness and emotional connection.
- **Junie Lau**: a multidisciplinary creator producing "Dear Stranger", a poetic story about a grandmother and granddaughter across multiple parallel universes, exploring digital identity and how emotion extends through it.

These cases demonstrate Flow's technical capabilities. They also show AI becoming deeply involved in narrative logic, emotional construction, and artistic aesthetics.

# Veo 3 launch and Veo 2 capability updates

Google DeepMind has officially released Veo 3, its most advanced video generation model to date. Compared with the previous generation, Veo 2, Veo 3 makes a qualitative leap in image quality, physical consistency, and prompt adherence. It also adds new creative dimensions, including audio, marking generative video's entry into the era of film-grade creation.

## Veo 3 core capabilities

- **4K output and enhanced realism**
  - Native 4K output, with visual detail approaching that of professional cameras;
  - Highly realistic adherence to real-world rules of motion physics, lighting, and materials;
  - Noticeably better object consistency and visual accuracy across scenes, so generated footage can blend seamlessly with live-action footage.

- **Stronger prompt understanding**
  - Significantly better prompt adherence than Veo 2;
  - Handles more complex natural-language descriptions, including camera movement, emotional tone, and visual detail;
  - Lets users express professional directing instructions in plain language (e.g., "early morning, seaside, handheld camera, soft fading light").

- **Upgraded creative control**
  - Coherent action transitions between shots, with characters staying consistent with the logic of the background;
  - Can build complex scene structures (e.g., multi-character interaction, dynamic narrative pacing);
  - Integrated **audio generation** (unique to Veo 3): for the first time, a video model can generate audio such as **ambient sound and dialogue**, matched to each scene's emotional atmosphere.
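Veo 3 takes plain natural-language prompts, but keeping the director's choices (camera, lighting, ambient sound, dialogue) in separate fields can make prompts easier to vary consistently across shots. The following is a minimal Python sketch under that assumption. `ShotPrompt` is a hypothetical helper, not part of any Veo API, and the phrasing it emits (labeling ambient sound, quoting dialogue) is an assumed convention rather than documented Veo syntax.

```python
from dataclasses import dataclass, field


@dataclass
class ShotPrompt:
    """Hypothetical structure for a director-style video prompt (not a Veo API)."""
    subject: str
    setting: str
    camera: str = ""
    lighting: str = ""
    mood: str = ""
    ambient_sound: str = ""
    dialogue: list[tuple[str, str]] = field(default_factory=list)  # (speaker, line)

    def render(self) -> str:
        """Flatten the fields into one plain-language prompt, skipping empty ones."""
        visual = [self.subject, self.setting, self.camera, self.lighting, self.mood]
        sentences = [", ".join(part for part in visual if part) + "."]
        if self.ambient_sound:
            sentences.append(f"Ambient sound: {self.ambient_sound}.")
        for speaker, line in self.dialogue:
            sentences.append(f'{speaker} says: "{line}"')
        return " ".join(sentences)


prompt = ShotPrompt(
    subject="an old fisherman mending a net",
    setting="early morning at the seaside",
    camera="handheld camera",
    lighting="soft, fading light",
    ambient_sound="waves lapping and distant gulls",
    dialogue=[("The fisherman", "The tide is turning.")],
)
print(prompt.render())
```

Changing only `camera` or `lighting` between renders keeps the subject and setting text identical, which is the kind of shot-to-shot consistency the section describes.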

## Veo 2 capability updates (compared with its initial release)

Although the focus has shifted to Veo 3, Veo 2 has also been upgraded, particularly in the following areas:

- A new control module that gives users more precise control over the style and detail of generated content;
- Improved scene consistency and better quality for longer shot sequences;
- Character consistency: providing reference images of a character lets Veo keep that character consistent across the different scenes of a video;
- Better integration with platforms such as Flow for a smoother creative workflow.

## Veo in Google Labs Flow

Veo is now integrated into the Google Labs Flow platform. Typical applications include:

- **Short-film previews and shot planning** (storyboarding and previsualization);
- **Virtual set design and character action simulation**;
- **Concept film production**;
- **Interactive narrative video generation** (e.g., game scene animation).

Veo 3 details: https://deepmind.google/models/veo/

# Imagen 4: New heights in image generation speed and quality

**Keywords: 2K resolution + multiple styles + improved typography**

- **Image quality**: significantly better than Imagen 3, rendering fine details such as water droplets, animal fur, and fabric texture;
- **Style diversity**: supports styles from photorealistic to abstract, and a range of aspect ratios (for print, social media, and presentations);
- **Improved text rendering and layout**: noticeably better spelling and typography, making it suitable for text-heavy visuals such as cards, posters, and comics;
- **Available in**: the Gemini app, Whisk, Workspace (Docs, Slides, Vids), and Vertex AI;
- **Coming soon**: Imagen 4 Flash, which generates images 10 times faster than Imagen 3.
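Image APIs often accept only a fixed set of aspect ratios, so a target such as an A4 page or a phone screen must be mapped to the nearest supported one. The Python sketch below shows one way to do that. `closest_aspect_ratio` is a hypothetical helper, and `SUPPORTED_RATIOS` is an assumption based on the ratios Vertex AI documents for Imagen; check the current documentation before relying on it.

```python
import math

# Assumption: the aspect ratios documented for Imagen on Vertex AI at the time
# of writing; the supported set may change, so check the current docs.
SUPPORTED_RATIOS = ["1:1", "3:4", "4:3", "9:16", "16:9"]


def closest_aspect_ratio(width: int, height: int) -> str:
    """Return the supported ratio closest to width:height.

    Distance is measured in log space, so 2:1 and 1:2 count as equally far
    from 1:1, which treats portrait and landscape targets symmetrically.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    target = math.log(width / height)

    def distance(ratio: str) -> float:
        w, h = (int(part) for part in ratio.split(":"))
        return abs(math.log(w / h) - target)

    return min(SUPPORTED_RATIOS, key=distance)


# Hypothetical target canvases for the print, social, and slide use cases above.
canvases = {
    "A4 print (portrait)": (2480, 3508),
    "phone story": (1080, 1920),
    "presentation slide": (1920, 1080),
    "square post": (1080, 1080),
}
for name, (w, h) in canvases.items():
    print(f"{name}: {closest_aspect_ratio(w, h)}")
```

After generating at the nearest ratio, the image would still need cropping or padding to hit the exact canvas size.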

# Lyria 2: Real-time music generation and control

Lyria 2 is Google DeepMind's latest **music generation model**. It aims to generate **high-fidelity, professional-grade audio** across a wide range of musical styles, with support for structured songs and integration into creative tools.

## 1. High-quality audio output

- Generates **studio-quality** audio;
- Supports instruments, combinations of vocals, and even simulations of real performance techniques;
- Sound quality good enough for official releases, performance backing tracks, or professional mixing.

## 2. Support for multiple structures and styles

- Covers a wide range of styles, from electronic and classical to hip-hop and jazz;
- Maintains strong consistency when handling complex rhythmic structures and **arrangements**;
- Supports cross-genre fusion, such as "electronic music with jazz elements" or "songs with African percussion".

## 3. A generation workflow designed for creators

- Integrated into Google's Music AI Sandbox for composers, music producers, and songwriters;
- Supports interactive generation and control (through MusicFX DJ or the Lyria RealTime API);
- Usable in many scenarios: **drafting songs, exploring musical ideas, and live performance**.
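Interactive tools like MusicFX DJ steer the music by mixing several style prompts and shifting their balance over time, for example fading from pure electronic toward "electronic music with jazz elements". The Python sketch below only computes such a blend. It does not call any Lyria API, and the assumption that the real-time service accepts weighted text prompts should be checked against the Lyria RealTime documentation. `crossfade_weights` and the style names are hypothetical.

```python
def crossfade_weights(
    start: dict[str, float], end: dict[str, float], t: float
) -> dict[str, float]:
    """Linearly blend two style mixes, then normalize so the weights sum to 1.

    t=0.0 gives the (normalized) start mix and t=1.0 the end mix; styles whose
    blended weight is zero are dropped.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    styles = set(start) | set(end)
    blended = {s: (1 - t) * start.get(s, 0.0) + t * end.get(s, 0.0) for s in styles}
    total = sum(blended.values())
    if total <= 0:
        raise ValueError("at least one style weight must be positive")
    return {s: w / total for s, w in blended.items() if w > 0}


# Hypothetical transition: pure electronic toward electronic with jazz elements.
start = {"electronic": 1.0}
end = {"electronic": 0.5, "jazz": 0.5}
for step in range(5):
    t = step / 4
    mix = crossfade_weights(start, end, t)
    print(f"t={t:.2f}", {style: round(w, 3) for style, w in sorted(mix.items())})
```

In a live setting, each computed mix would be sent to the generator at regular intervals. Interpolating in small steps keeps the style change gradual rather than abrupt.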

**Available on**: YouTube Shorts, Vertex AI, and the AI Studio API.

Lyria 2 details: https://deepmind.google/models/lyria/