- V3 main upgrade highlights

- # 1. ** Emotion + Command + Sound *

- 2. ** Multi-player, cross-interruptment of dialogue**

- 4. ** Text to Dialogue New Mode**

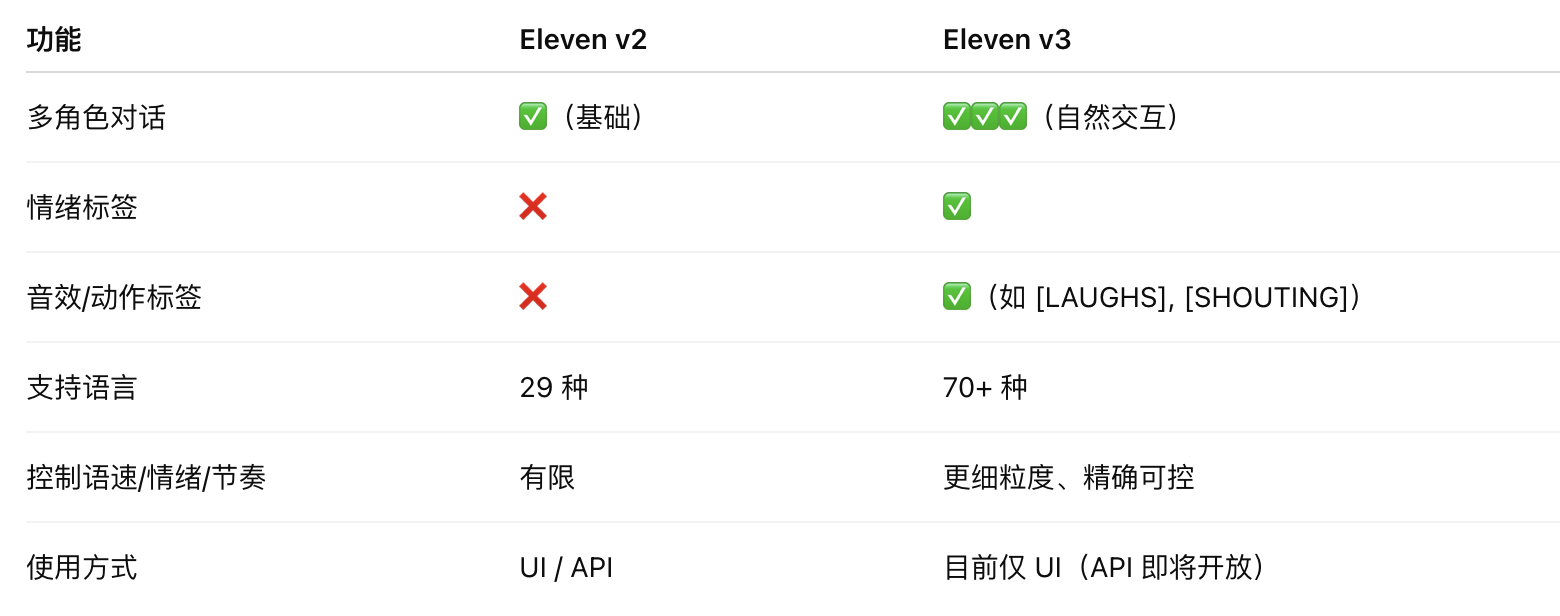

- v2 versus v3

- ** Which labels do you support?**

♪ Come on, come on, come on, come on, come on, come on ♪

Eleven Labs has launched a new generation-wide text-to-language model leven v3 (Alpha version), which is one of the most powerful TTS models at present, supporting natural dialogue between multiple languages and actors, as well as precision control of speech sentiment and non-language expression through audio labels such as [sad], [whopers], [laghs]. V3 has a stronger text-comprehensive ability to simulate interruptions, emotional changes, and tone adjustments in real conversations than in the old version. This makes it very suitable for video creation, audio book production, and media tool development. ** Features**:

-

Support 70+ Language

-

Support ** Multi-Role Dialogue**

-

Support** audio tags** (e.g. [sad], [lughs], [whisters] etc.) to control emotions and performance

-V3 is a research preview, requiring a higher level of alert work, but it’s very good at generating it.

-

The new architecture has a deeper understanding of the text and allows for a more expressive voice

-

Simulation of emotions, interruptions, tone changes in the reality dialogue**

-

I’m about to open the API interface to support creators and developers

V3 main upgrade highlights

# 1. ** Emotion + Command + Sound *

v3 Supports a new version of “audio tags”, where developers or creators can control voice:

-

Emotions. (Anger, happy, nervous, calm, etc.)

-

Emphasis and tone.

-

Pauses, speeds, mixes of sound (e.g. laughter, screams)

Example: You can generate a piece that moves from a “low voice” to a “hysteric laugh” and adds a background sound that allows the listener to immerse in it.

2. ** Multi-player, cross-interruptment of dialogue**

-

Support for natural interactions ** two or more roles**;

-

Support for synchronous context and emotional matching;

-

Interruption, talk-making, humor, etc., can be set up in the dialogue;

-

Simulation of real human dialogue scenes that are more fluid than any previous version.

3. ** Language coverage: 70+ Language support**

Compared to the 29 languages in v2, v3 currently supports more than 70 languages and covers:

-

All mainstream languages (English, Chinese, French, Spanish, Arabic, etc.)

-

Small regional languages (Sanjaro, Kyrgyz, Urdu, etc.)

Use: Non-English podcast, global sounding, localised audio content generation.

4. ** Text to Dialogue New Mode**

This is one of the most powerful capabilities in v3:

-

Automatically woven different roles, tone and sound into “dialogue audio” through plain text;

-

Without the need to mark the role or tone of each sentence, the system will automatically judge;

-

The dialogue generated is extremely active and consistent and applies to audio dramas, game dialogue, advertising, etc.

v2 versus v3

** Which labels do you support?**

-

Emotions: [ANGRY], [LAUGHS], [WHISPERS]

-

Action class: [SHUTING], [SIGHING]

-

Sound class: [EVIL LAUGH], [GIGGLE] Label detail: Promising Guide

Official presentation: https://elevenlabs.io/v3