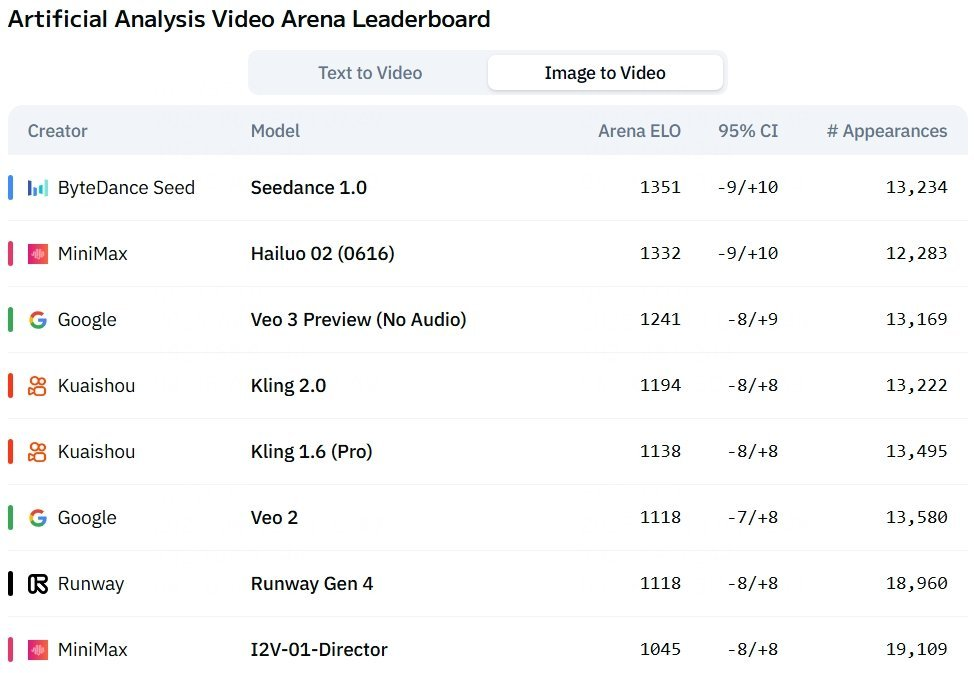

MiniMax has released its latest AI video generation model, Hailuo 02 (codename “Kangaroo”). It ranks second on the Artificial Analysis video leaderboard, behind ByteDance's Seedance 1.0 Pro and ahead of Google Veo 3.

- Hailuo 02 is currently the only publicly available AI video generation model that combines high image quality, complex instruction control, and high performance;
- Compared with other major video generation players such as Runway, Pika Labs, and SVD, Hailuo puts greater emphasis on **realistic physical modelling** and **complex motion generation**, targeting professional creative scenarios such as animation, CG, advertising, and film previsualization.

## Generation capabilities and features

**Resolution and frame rate**:

- Supports **native 1080p** (1920 x 1080) resolution at 24-30 fps, delivering professional-grade visuals suitable for social media, advertising prototypes, or short films.
- A 768p option is also available, supporting 6- or 10-second videos for flexibility across different needs.

**Video duration**:

- Generates videos up to 10 seconds long, up from 6 seconds in the previous generation (Hailuo 01); suitable for short-form content such as social media clips, promotional segments, or animated storyboards.

**Advanced physics simulation**:

- Introduces a groundbreaking Noise-aware Compute Redistribution (NCR) architecture, with a 2.5-fold increase in training and inference efficiency, a threefold increase in parameter count, and a fourfold increase in training data size.
- Handles complex physical scenes well, such as gymnastics, fluid dynamics, collisions, and material interactions, producing natural, realistic motion. For example, it can accurately render explosions, fabric movement, or light reflections.

**Instruction following and creative control**:

- Supports **multilingual text prompts** (including English and Chinese), interpreting complex instructions accurately and generating videos that match user intent.
- Provides camera control commands that let users specify lens movements (e.g. panning, tilting, pushing in) to achieve a cinematic effect similar to a professional Steadicam.
- Maintains strong character consistency, using advanced facial recognition and body tracking techniques to keep characters, clothing, and features consistent across all frames.

**Generation modes**:

- **Text-to-Video (T2V)**: generates video from a text description; suited to creating from scratch.
- **Image-to-Video (I2V)**: animates a static image while preserving its art style and details; suited to turning illustrations or photographs into dynamic content.
- **Subject-to-Video (S2V)**: keeps a subject consistent based on reference images; suited to multi-shot or continuous scenes.
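To make the combination of modes, resolutions, and durations above concrete, here is a minimal Python sketch of client-side request validation. The `VideoRequest` class, its field names, and the `validate` function are hypothetical illustrations, not MiniMax's API. The 768p durations come from the text; allowing only 6 seconds at 1080p is an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    T2V = "text-to-video"     # generate from a text prompt alone
    I2V = "image-to-video"    # animate a still image
    S2V = "subject-to-video"  # keep a reference subject consistent


# Allowed clip lengths (seconds) per resolution. The 768p values follow the
# text; restricting 1080p to 6 s is an ASSUMPTION for illustration.
ALLOWED_DURATIONS = {"768p": {6, 10}, "1080p": {6}}


@dataclass
class VideoRequest:
    """Hypothetical request shape; field names are not MiniMax's API."""
    mode: Mode
    prompt: str
    resolution: str = "768p"
    duration_s: int = 6
    image: Optional[bytes] = None          # source frame for I2V
    subject_image: Optional[bytes] = None  # reference subject for S2V


def validate(req: VideoRequest) -> list[str]:
    """Return human-readable problems; an empty list means the request looks valid."""
    errors = []
    if req.resolution not in ALLOWED_DURATIONS:
        errors.append(f"unsupported resolution {req.resolution!r}")
    elif req.duration_s not in ALLOWED_DURATIONS[req.resolution]:
        errors.append(f"{req.duration_s}s not offered at {req.resolution}")
    if req.mode is Mode.T2V and not req.prompt.strip():
        errors.append("T2V needs a non-empty prompt")
    if req.mode is Mode.I2V and req.image is None:
        errors.append("I2V needs a source image")
    if req.mode is Mode.S2V and req.subject_image is None:
        errors.append("S2V needs a subject reference image")
    return errors
```

For example, `validate(VideoRequest(Mode.I2V, "waves", resolution="1080p", duration_s=10))` reports both the unsupported duration and the missing source image, so a client can surface every problem at once instead of failing on the first.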

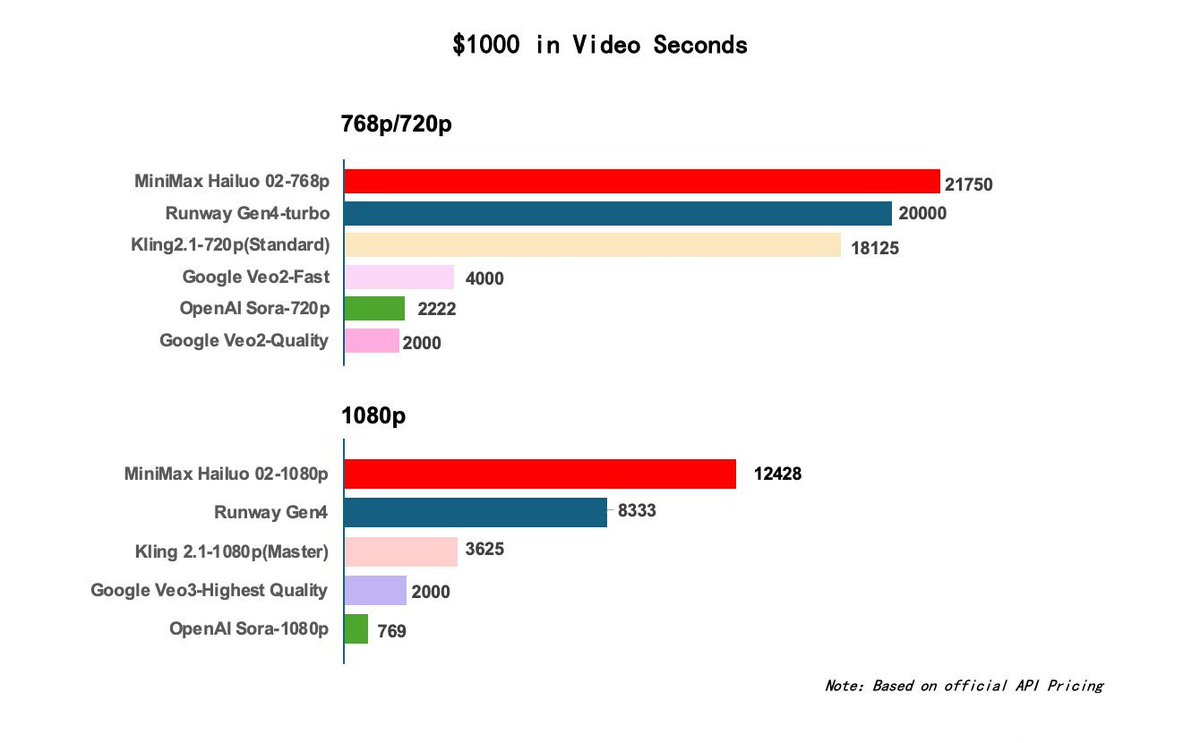

## Cost strategy

- Thanks to the model's significant efficiency gains, Hailuo 02 maintains high quality while costs have fallen rather than risen;
- It offers high value for money compared with similar products in the industry (e.g. Runway, Pika, SVD).

Pricing is highly competitive: generating a 5-10 second video costs approximately **US$0.28-0.56**, and 1080p video costs about **US$0.08 per second**, which is more economical than many competitors (e.g. Seedance 1.0 Pro).
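The quoted prices are easier to compare once normalized to a per-second rate. The sketch below assumes, as one reading of the figures, that US$0.28 and US$0.56 are the prices of whole 5- and 10-second clips; pairing each endpoint with a duration is itself an assumption. Under that reading both clips work out to about US$0.056 per second, below the quoted US$0.08 per second for 1080p.

```python
# ASSUMPTION: 0.28 and 0.56 are whole-clip prices for 5 s and 10 s clips.
CLIP_PRICES_USD = {5: 0.28, 10: 0.56}  # duration (s) -> price per clip (US$)
PRICE_1080P_PER_S = 0.08               # quoted 1080p rate (US$ per second)


def per_second(price_usd: float, duration_s: int) -> float:
    """Normalize a whole-clip price to a per-second rate."""
    return price_usd / duration_s


def cost_1080p(duration_s: int) -> float:
    """Price of a 1080p clip at the quoted per-second rate, rounded to cents."""
    return round(PRICE_1080P_PER_S * duration_s, 2)


if __name__ == "__main__":
    for duration, price in CLIP_PRICES_USD.items():
        rate = per_second(price, duration)
        print(f"{duration:>2} s clip: ${price:.2f} total = ${rate:.3f}/s "
              f"(1080p equivalent: ${cost_1080p(duration):.2f})")
```

If the range turns out to be per second after all, only `CLIP_PRICES_USD` needs to change; the comparison logic stays the same.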

Generation takes approximately **30-60 seconds** (depending on complexity), and some platforms (e.g. GoEnhance) claim to deliver high-resolution video in as little as 90 seconds.

Official stance: there are **no high barriers to entry for creators**, and the technology should be accessible to everyone.

## Technological innovation

The release of Hailuo 02 is not merely a version upgrade but a technological leap across multiple dimensions.

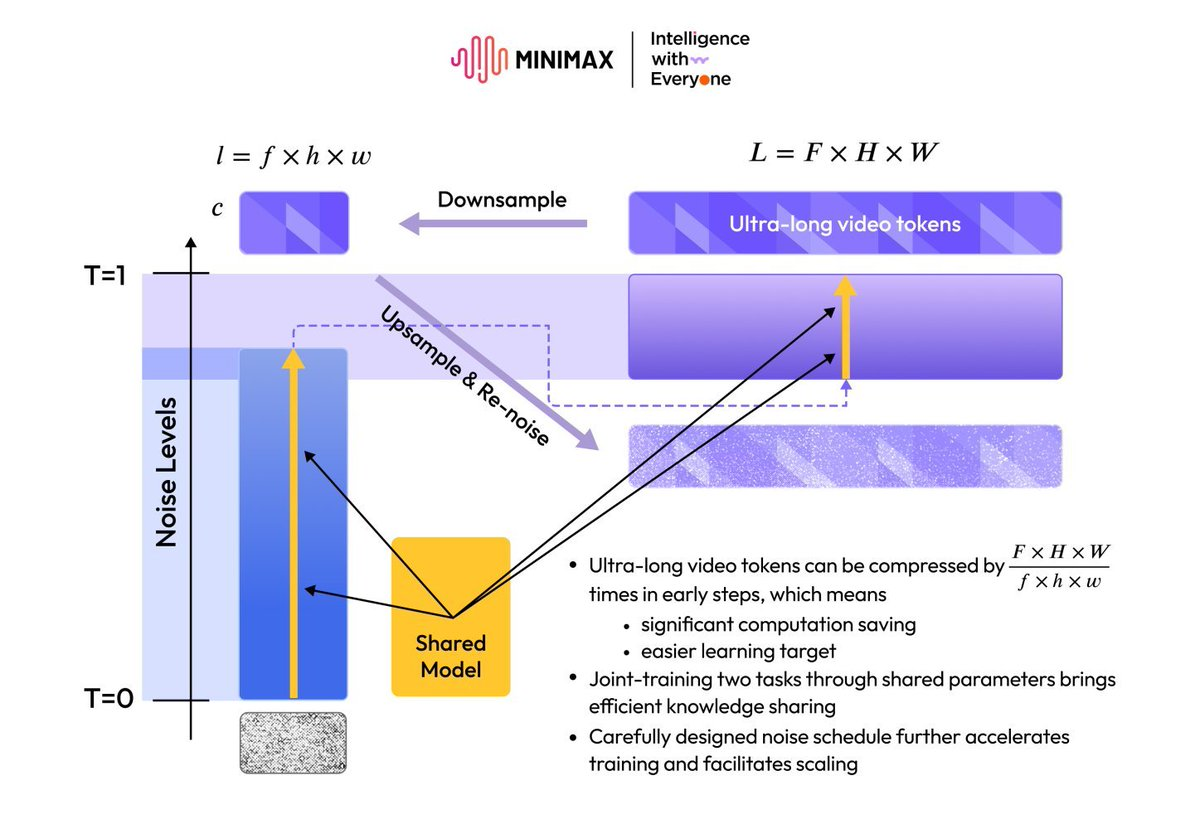

### 1. Architectural innovation: NCR (Noise-aware Compute Redistribution)

Rationale:

- Noise-aware compute redistribution is an efficient way of allocating compute during training and inference;
- It dynamically redirects computational resources to where they matter most for the model's learning task, avoiding wasted compute.

Outcomes:

- **2.5x more efficient training and inference**;
- Larger models (more parameters) can run on the same hardware resources;
- Leaves ample room for further inference optimization, **so generation could be accelerated further in the future**.
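The text describes NCR only at a high level, so the code below is a toy illustration of the general idea of noise-aware compute redistribution, not MiniMax's algorithm. It splits a fixed integer compute budget (the unit, e.g. tokens or iterations, is an assumption) across diffusion denoising steps according to each step's noise level. The importance function `weight`, which by default favors less noisy steps, is a hypothetical choice.

```python
import math
from typing import Callable, Sequence


def allocate_budget(
    noise_levels: Sequence[float],
    total_budget: int,
    weight: Callable[[float], float] = lambda s: 1.0 - s,
    floor: int = 1,
) -> list[int]:
    """Split an integer compute budget across denoising steps by noise level.

    noise_levels: per-step noise level in [0, 1], where 1.0 is pure noise.
    weight: HYPOTHETICAL importance of a step given its noise level; the
        default gives more compute to less noisy (detail-forming) steps.
    floor: minimum units every step receives.
    """
    n = len(noise_levels)
    if n == 0 or total_budget < floor * n:
        raise ValueError("need at least one step and budget >= floor * steps")
    spare = total_budget - floor * n
    w = [max(weight(s), 0.0) for s in noise_levels]
    if sum(w) == 0:
        w = [1.0] * n  # fall back to a uniform split
    total_w = sum(w)
    raw = [spare * wi / total_w for wi in w]
    alloc = [floor + math.floor(r) for r in raw]
    # Largest-remainder rounding: give leftover units to the steps that lost
    # the most to flooring, so the allocation sums exactly to total_budget.
    leftover = total_budget - sum(alloc)
    by_remainder = sorted(range(n), key=lambda i: raw[i] - math.floor(raw[i]), reverse=True)
    for i in by_remainder[:leftover]:
        alloc[i] += 1
    return alloc
```

For instance, `allocate_budget([1.0, 0.75, 0.5, 0.25, 0.0], 20)` shifts most of the budget toward the final, low-noise steps. Passing a different `weight` (say `lambda s: s` to favor early, structure-forming steps) changes where compute goes without changing the total; which direction Hailuo 02 actually favors is not stated in the text.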

### 2. Model size: **parameters expanded to 3x the previous generation**

A larger model means:

- Greater capacity for generating image detail;
- More complex semantic understanding and execution;
- More stable and logically coherent output.

Thanks to NCR's improved compute efficiency, this expansion has not increased costs; instead, costs have been kept under better control.

### 3. Data scale and quality: **training data expanded 4x**

The data cover a broader range, including:

- Complex character movements;
- Lighting changes in realistic environments;
- Video content from different cultural and linguistic contexts.

Data diversity has increased significantly, substantially improving the model's ability to generalize. High-quality labelled datasets were also built from Hailuo 01 usage feedback, enabling more refined, goal-driven training.

### 4. Instruction following: state-of-the-art

- Responds accurately to more complex natural-language prompts;
- Performs strongly on multi-part descriptions, abstract concepts, and motion-control prompts;
- In testing, **motion coordination, continuity, and physical plausibility in complex scenarios improved significantly**.