- Core highlights
- Typical uses
- System architecture and security model
- Upcoming and planned features

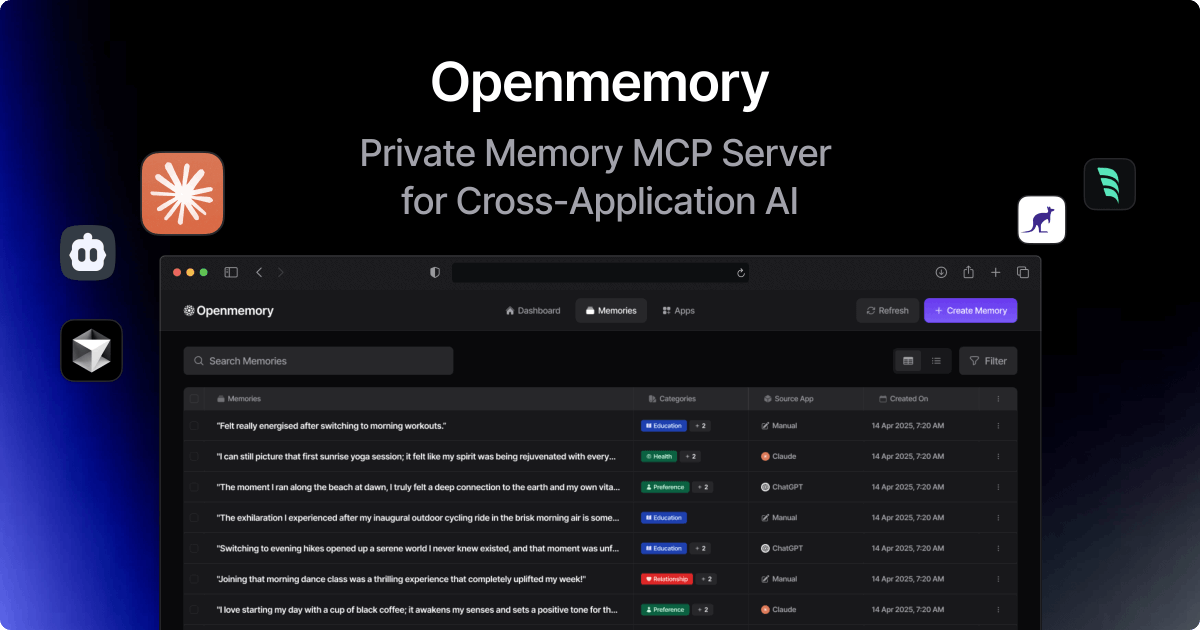

OpenMemory MCP is a locally run application for storing, organizing, and managing your "memory" so that context carries over between different AI tools. It addresses three main pain points of current LLM tools: inconsistent memory, missing context, and weak privacy protection. To do so, it:

- provides a unified, structured, privately owned "memory layer";
- manages memory locally to protect privacy;
- keeps data flows under user control through a permission model;
- supports extension to many kinds of AI clients.

It lets you carry historical information, conversation style, preferences, and project status across Claude, Cursor, Windsurf, and other LLM tools, giving you a continuous, cross-platform AI experience. In other words: **for the first time, your personal AI assistant has a persistent memory module that truly belongs to you, one that can be shared back and forth between different AI tools while privacy and control stay in the user's hands**.

# Core Highlights

# Typical Uses

**Project context transfer**

- When you discuss an API design in Claude and then move to Cursor to write the code, the design details, constraints, and requirements remain available.

**Debugging history**

- OpenMemory MCP automatically records how you have investigated certain kinds of bugs in the past, and the AI can make suggestions based on those patterns.

**Prompt style memory**

- Stores the prompt style you use for different tasks so that different tools can imitate or continue it.

**Meeting summaries and notes**

- Records summaries of past meetings and your feedback, which the AI can cite when generating documents or summaries.

**Product evolution log**

- Records the full path from feature requirements through feedback to implementation, so the AI can support retrospectives and iteration.

# System architecture and security model

Local-first:

- By default, all data is stored only on the local machine, and the application works without a network connection.
- Nothing is synchronized to the cloud automatically; data leaves the machine only when the user explicitly exports or shares it.
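As a rough illustration of the local-first idea, the sketch below keeps memories in a single SQLite file and writes data elsewhere only when an export is requested explicitly. It is a minimal sketch under stated assumptions, not OpenMemory MCP's actual storage code: the class name `LocalMemoryStore`, the table layout, and the `add`, `search`, and `export` methods are all hypothetical.

```python
import json
import sqlite3
from pathlib import Path


class LocalMemoryStore:
    """Hypothetical local-first store: all memories live in one SQLite database."""

    def __init__(self, db_path=":memory:"):
        # A file path keeps data on the local disk; ":memory:" is handy for trying it out.
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memories ("
            "id INTEGER PRIMARY KEY, topic TEXT NOT NULL, content TEXT NOT NULL)"
        )

    def add(self, topic, content):
        """Store one memory locally and return its row id. No network is involved."""
        cur = self.conn.execute(
            "INSERT INTO memories (topic, content) VALUES (?, ?)", (topic, content)
        )
        self.conn.commit()
        return cur.lastrowid

    def search(self, topic):
        """Return the stored contents for a topic, oldest first."""
        rows = self.conn.execute(
            "SELECT content FROM memories WHERE topic = ? ORDER BY id", (topic,)
        )
        return [row[0] for row in rows]

    def export(self, target_path):
        """Write all memories to a JSON file; called only on explicit user request."""
        rows = self.conn.execute(
            "SELECT topic, content FROM memories ORDER BY id"
        ).fetchall()
        payload = [{"topic": topic, "content": content} for topic, content in rows]
        Path(target_path).write_text(json.dumps(payload, indent=2), encoding="utf-8")
        return len(payload)
```

In this sketch, `LocalMemoryStore("memories.db")` would persist to a local file, and nothing is copied anywhere else unless `export(...)` is called.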

Permission-based, auditable access:

- Each AI tool needs explicit authorization before it can read or write memory.
- Users can view detailed access logs and see how their data is used.
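The permission model can be pictured as a thin guard in front of the memory store: every read or write is checked against per-client grants, and every attempt, allowed or not, is appended to an access log. The sketch below is an assumption-laden illustration rather than OpenMemory MCP's real API: `GuardedMemory`, `grant`, `revoke`, and the log entry fields are hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GuardedMemory:
    """Hypothetical wrapper: every read/write is authorized and logged."""

    grants: dict = field(default_factory=dict)      # client name -> set of allowed actions
    items: list = field(default_factory=list)       # the stored memories
    access_log: list = field(default_factory=list)  # one entry per access attempt

    def grant(self, client, *actions):
        """Explicitly authorize a client for 'read' and/or 'write'."""
        self.grants.setdefault(client, set()).update(actions)

    def revoke(self, client, action):
        """Withdraw a previously granted action; a no-op if it was never granted."""
        self.grants.get(client, set()).discard(action)

    def _check(self, client, action):
        # Log the attempt first, so denied requests are also visible to the user.
        allowed = action in self.grants.get(client, set())
        self.access_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "client": client,
            "action": action,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{client} is not authorized to {action} memory")

    def write(self, client, text):
        self._check(client, "write")
        self.items.append(text)

    def read(self, client):
        self._check(client, "read")
        return list(self.items)  # return a copy so callers cannot mutate the store
```

For example, after `mem.grant("cursor", "read")`, a call to `mem.read("cursor")` succeeds, while `mem.write("cursor", ...)` raises `PermissionError`; both attempts appear in `mem.access_log`.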

# Upcoming and Planned Features