- Core functions and characteristics

- Limitations

- Compared to competition

- How to generate the video

- Output resolution and video dimensions

  - Video settings

  - Video playback and extension

  - Video mode description

- Upload requirements (compliance)

Midjourney has officially launched its first AI video generation model, the V1 Video Model, which is now available to subscribers. It turns a single image into a 5-second animated video, lets users decide whether to add a prompt, and uses motion to make the still image appear to come alive.

-

** Generate video from static images**: Users can upload their own images or image from Midjourney, with one key generating four 5-second clips

-

extencable to 20–21 seconds: After initial 5 seconds, up to four additional 4 seconds per video segment, up to 20 (or 21) seconds

-

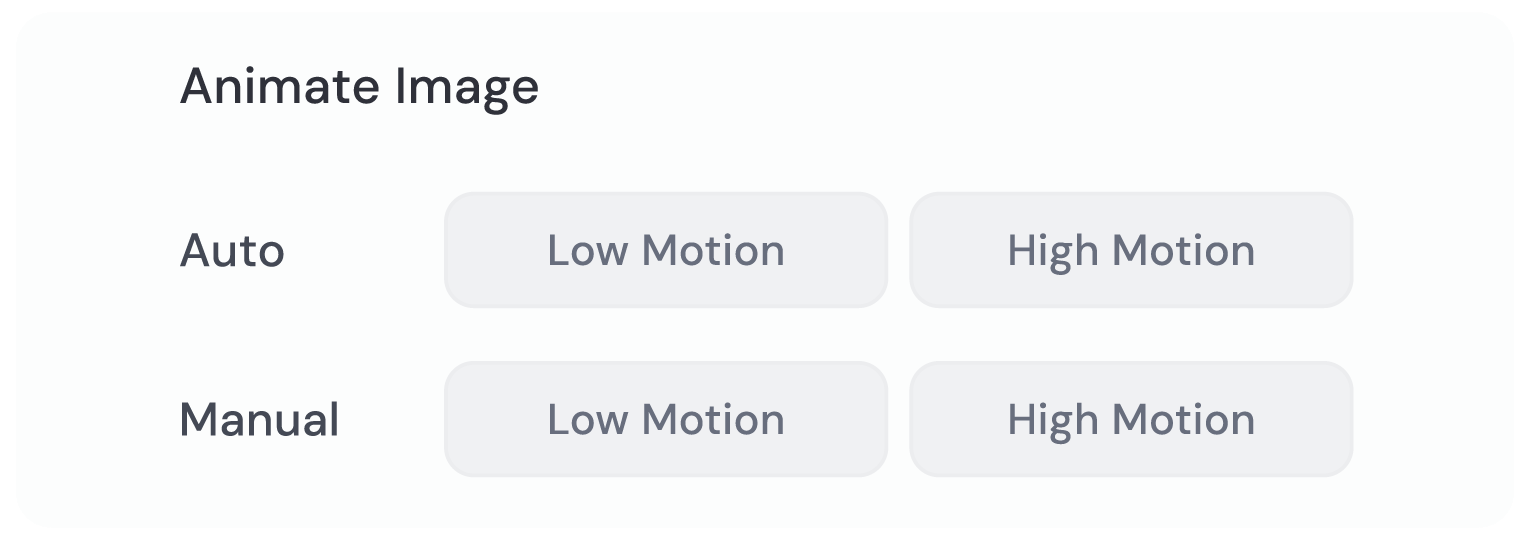

** Animation Control**: supports two mode of motion: Low Motion: Fits for micro-activities in static settings.

-

High Motion: for larger cameras or role movements.

-

You can choose animating the image automatically or adjusting it through a texttip.

Midjourney says its video model is aimed at much more than producing B-roll footage for Hollywood films or commercials for the advertising industry. CEO David Holz describes it as the next step toward the company's ultimate goal: AI models capable of running open-world simulations in real time. Midjourney's long-term objective is an open-world simulation system **generated in real time**, in which:

- users can move freely through 3D space;
- scenes and characters are **dynamic and interactive**;
- the AI system generates imagery and responds to user actions in real time.

To achieve this vision, Midjourney plans to build the following key technical modules step by step:

- Image model (completed)
- Video model (now rolled out)
- 3D spatial interaction model (forthcoming)
- Real-time performance optimization model (future goal)

# Core functions and characteristics

**Image-to-video generation**:

- Users can generate a still image on the Midjourney platform (or upload an external one) and convert it to video with the Animate button. This ties video directly into Midjourney's image ecosystem, so one workflow can go from a text prompt to an image to a video.
- Two animation modes are provided:
  - **Automatic animation**: the AI generates the motion on its own.
  - **Custom motion prompt**: users describe in text how elements in the scene should move, e.g. by specifying the camera movement or how an object moves.
- The current model produces short videos, about 20 seconds at most.
- Videos can be extended by 4 seconds at a time, giving users some flexibility.

**High and low motion options**:

- "High motion" and "low motion" settings produce more or less dynamic videos, respectively, to suit different creative needs.

**Cost and accessibility**:

- Plans start at $10/month, which makes it good value and positions it as a video tool for individual creators.
- According to user reports, generating twenty 4-second videos (80 seconds in total) uses about one "fast hour" of GPU time, costing roughly $4. That is more cost-effective than competitors such as Google's Veo 3.
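The user-reported figures above imply a cost per second of footage that is easy to recompute for other usage levels. The sketch below is a minimal example. The function name is illustrative, and the inputs are the user-reported $4 for twenty 4-second clips, not official pricing.

```python
def cost_per_second(total_cost_usd: float, clip_count: int, seconds_per_clip: float) -> float:
    """Average cost per second of generated footage."""
    total_seconds = clip_count * seconds_per_clip
    if total_seconds <= 0:
        raise ValueError("total footage must be positive")
    return total_cost_usd / total_seconds


# User-reported figures: about $4 for twenty 4-second clips (80 s of footage).
rate = cost_per_second(4.0, 20, 4)
print(f"${rate:.2f} per second, ${rate * 60:.2f} per minute")
# prints: $0.05 per second, $3.00 per minute
```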

**Visual quality and style**:

- The videos inherit the high quality and artistic style of Midjourney's image generation. Each frame has a painterly beauty, as if every moving shot had been composed by a director.
- It emphasizes **high-fidelity visuals**, but its current resolution and detail are roughly on par with top competitors (e.g. Luma Labs' Dream Machine or MiniMax's Hailuo 02) rather than clearly ahead.

# Limitations

**No audio generation**:

- Unlike Google Veo 3 or Luma Labs' Dream Machine, Midjourney's video model cannot generate audio tracks or ambient sound. Users must add audio manually in post-production.

**Duration and editing limitations**:

- Videos are limited to about 20 seconds. There is no timeline editing, no scene transitions, and no cutting between shots, so functionality is limited.

**Generation speed**:

- Rendering may be slower, and complex motion or camera work is handled less efficiently than by some competitors (e.g. MiniMax's Hailuo 02).

# Compared to competition

Midjourney is entering a highly competitive AI video generation market and faces rivals such as Runway, Luma Labs, Google Veo 3, and MiniMax Hailuo 02:

- **Strengths**: Midjourney builds on its mature image-generation technology and user-friendly interface. It offers a seamless image-to-video workflow at lower cost, which makes it well suited to short-video experiments and creative exploration.
- **Weaknesses**: It has no audio generation, shorter video durations, and limited editing functions. As a result, it is less comprehensive than products from Runway or Luma Labs.

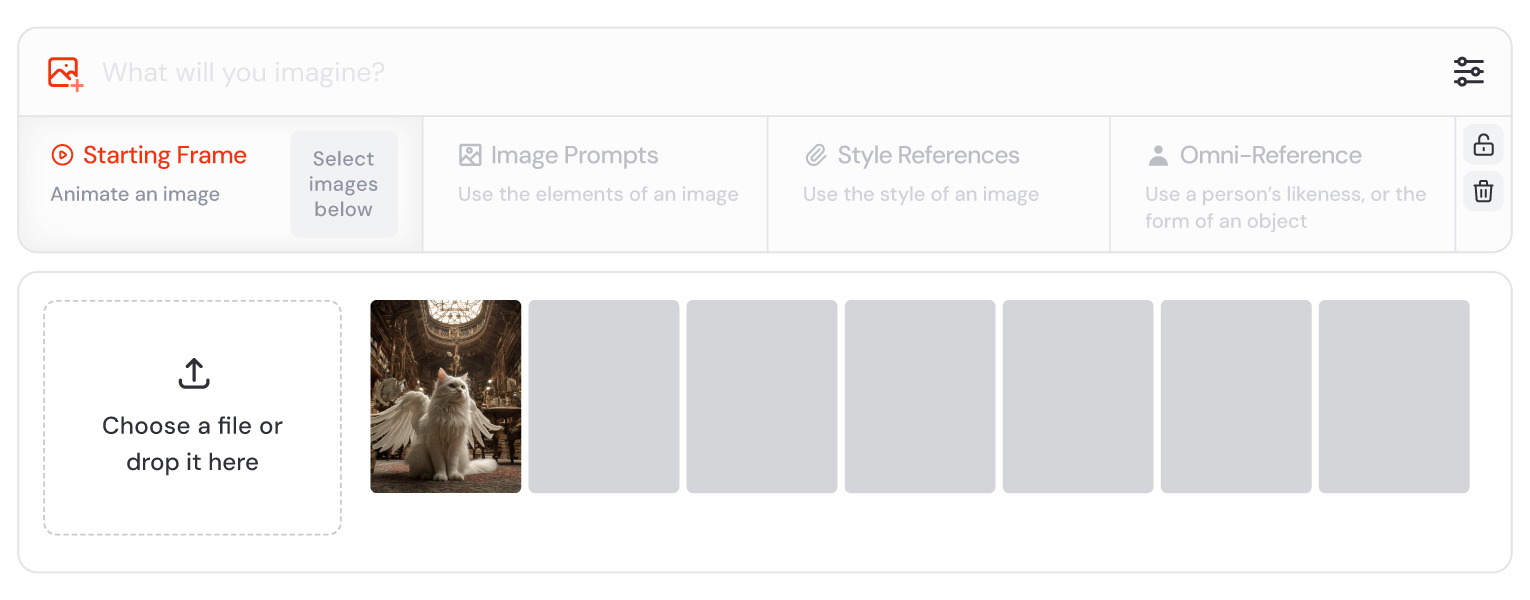

# How to generate the video

**Using images generated by Midjourney**:

- Open the image and click the Animate Image button, then choose:
  - Auto: generates the video automatically from the original image;
  - Manual: lets you edit the prompt before generating.
- The parameters originally used to generate the image are removed automatically. This does not affect video generation.
- A shortcut button also appears when you hover over an image on the "Create" page.

**Using images uploaded by users**:

- Upload an image or select an existing one via the image icon in the Imagine bar;
- Drag the image into the "Starting Frame" area to use it as the starting frame;
- Lock the image to reuse it with multiple prompts;
- Images can only be used as a "start frame". Other image references, such as "Style Reference" and "Image Prompt", do not work for video generation.

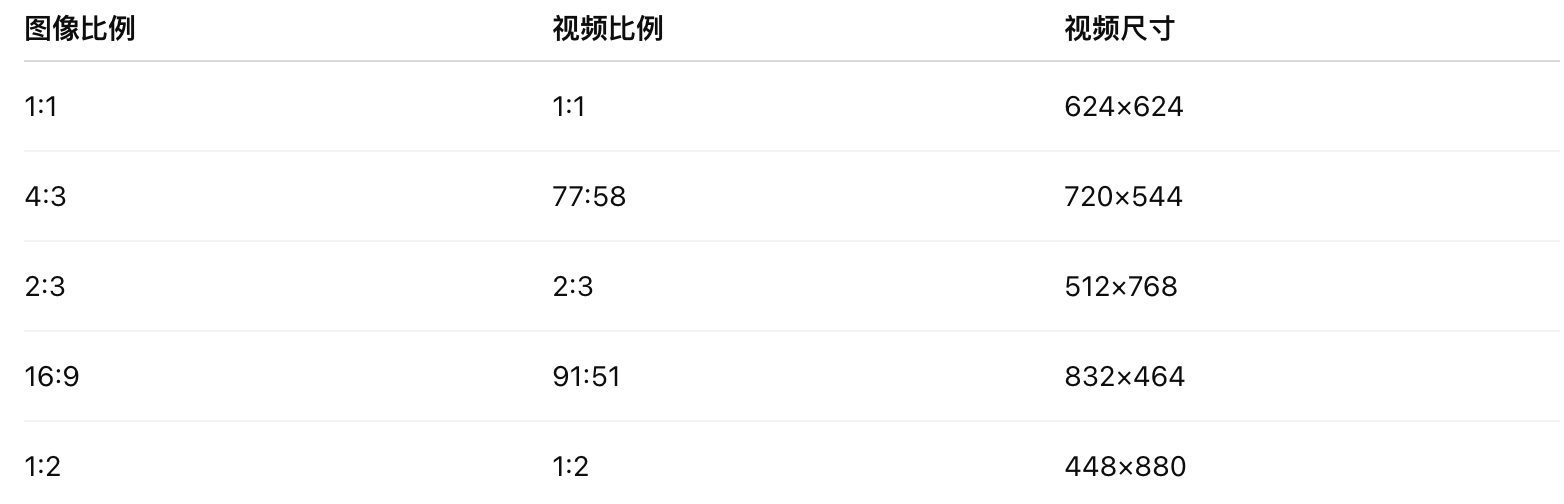

# Output resolution and video dimensions

Output videos are fixed at 480p (standard definition). The exact pixel dimensions depend on the aspect ratio of the starting image.
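The dimension table for each aspect ratio is not reproduced here. As a rough illustration only, the sketch below shows how a fixed resolution class can map to different frame sizes. It makes two assumptions: that the output keeps about the pixel count of a 16:9 480p frame (854 × 480), and that each side is snapped to a multiple of 16. Midjourney's actual dimensions may differ, and the function names are hypothetical.

```python
import math

# Assumption: the output keeps roughly the pixel count of a 16:9 480p frame.
# This is NOT Midjourney's documented table, only an illustration.
PIXEL_BUDGET = 854 * 480
# Assumption: each side is snapped to a multiple of 16, a common video-encoding constraint.
ALIGN = 16


def snap(value: float) -> int:
    """Round to the nearest multiple of ALIGN (never below ALIGN)."""
    return max(ALIGN, round(value / ALIGN) * ALIGN)


def estimate_dimensions(aspect_w: int, aspect_h: int) -> tuple[int, int]:
    """Estimate (width, height) in pixels for an aspect ratio under the assumptions above."""
    ratio = aspect_w / aspect_h
    # Solve width * height == PIXEL_BUDGET with width / height == ratio.
    width = math.sqrt(PIXEL_BUDGET * ratio)
    height = math.sqrt(PIXEL_BUDGET / ratio)
    return snap(width), snap(height)


for w, h in [(16, 9), (1, 1), (9, 16)]:
    print(f"{w}:{h} -> {estimate_dimensions(w, h)}")
```

Keeping the pixel count roughly constant, rather than fixing one side, is why wide and tall images end up with mirrored dimensions under this assumption.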

## Video settings

Click the settings icon in the Imagine bar to access:

- the default motion mode;
- GPU speed control;
- Stealth Mode (keeps generations private).

Note: Only Pro and Mega subscribers can generate videos in Relax Mode.
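The settings above can be modeled as a small configuration object that enforces the Relax Mode restriction from the note. This is a hypothetical sketch. The class, its field names, and the set of GPU speed values (`fast`, `turbo`, `relax`) are assumptions, not a Midjourney API.

```python
from dataclasses import dataclass

# Plans that, per the note above, may generate videos in Relax Mode.
RELAX_VIDEO_PLANS = {"pro", "mega"}
# Assumption: the GPU speed options offered in the settings panel.
SPEED_MODES = {"fast", "turbo", "relax"}


@dataclass
class VideoSettings:
    """Hypothetical mirror of the Imagine-bar video settings (not a Midjourney API)."""

    plan: str                    # subscription tier, e.g. "basic", "standard", "pro", "mega"
    default_motion: str = "low"  # "low" or "high"
    gpu_speed: str = "fast"
    stealth_mode: bool = False

    def validate(self) -> None:
        """Raise ValueError if the combination of settings is not allowed."""
        if self.default_motion not in ("low", "high"):
            raise ValueError("default_motion must be 'low' or 'high'")
        if self.gpu_speed not in SPEED_MODES:
            raise ValueError(f"unknown GPU speed: {self.gpu_speed}")
        if self.gpu_speed == "relax" and self.plan.lower() not in RELAX_VIDEO_PLANS:
            raise ValueError("video generation in Relax Mode requires a Pro or Mega plan")


VideoSettings(plan="Pro", gpu_speed="relax").validate()  # allowed: no exception
```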

## Video playback and extension

**Playback control**:

- Hovering over a video on the "Create" page plays it automatically;
- Hold Ctrl/Command and move the mouse to scrub through the video.

**Extending a video**: each extension adds 4 seconds, up to 4 times, for a total of up to **21 seconds**.

- Extend Auto: continues automatically with the original prompt;
- Extend Manual: lets you edit the prompt before extending.
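The extension rules above can be captured in a small model that tracks the prompt used for each segment and the resulting length. This is a hypothetical sketch. `VideoClip` and `extend` are illustrative names, not a Midjourney API. Passing no prompt stands in for Extend Auto, and passing an edited prompt stands in for Extend Manual.

```python
from dataclasses import dataclass, field
from typing import Optional

INITIAL_SECONDS = 5    # length of the first generated clip
EXTENSION_SECONDS = 4  # seconds added by each extension
MAX_EXTENSIONS = 4     # extensions allowed per video


@dataclass
class VideoClip:
    """Hypothetical model of a clip and its extension history (not a Midjourney API)."""

    prompt: str
    segment_prompts: list[str] = field(default_factory=list, init=False)

    def __post_init__(self) -> None:
        # The initial 5-second segment uses the original prompt.
        self.segment_prompts.append(self.prompt)

    @property
    def extensions(self) -> int:
        return len(self.segment_prompts) - 1

    @property
    def duration(self) -> int:
        return INITIAL_SECONDS + EXTENSION_SECONDS * self.extensions

    def extend(self, edited_prompt: Optional[str] = None) -> int:
        """Extend Auto when edited_prompt is None, Extend Manual otherwise; return new length."""
        if self.extensions >= MAX_EXTENSIONS:
            raise ValueError("maximum number of extensions reached")
        self.segment_prompts.append(self.prompt if edited_prompt is None else edited_prompt)
        return self.duration
```

Four extensions give 5 + 4 × 4 = 21 seconds. A fifth call raises an error.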

## Video mode description

- `--motion low` (default): subtle movement, static scenes, slow camera moves, and small character movements;
- `--motion high`: large camera movements and clearly visible character motion (may produce unnatural or glitchy results);
- `--raw`: turns off the system's style optimization so the output follows the prompt more precisely.
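The parameters above are appended to a prompt in Midjourney's usual `--parameter value` form. The helper below is a hypothetical convenience for assembling a Manual prompt. Only `--motion low`, `--motion high`, and `--raw` come from the list above. The function itself is illustrative.

```python
def build_video_prompt(text: str, motion: str = "low", raw: bool = False) -> str:
    """Append motion and raw parameters to a motion prompt (illustrative helper)."""
    if motion not in ("low", "high"):
        raise ValueError("motion must be 'low' or 'high'")
    parts = [text.strip(), f"--motion {motion}"]
    if raw:
        # Turn off style optimization so the prompt is followed more closely.
        parts.append("--raw")
    return " ".join(parts)


print(build_video_prompt("a dancer spins under stage lights", motion="high", raw=True))
# prints: a dancer spins under stage lights --motion high --raw
```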

# Upload requirements (compliance)

- You must have the legal right to use any image you upload;
- Creating insulting, pornographic, or inflammatory videos is prohibited, especially when they involve real people;
- The system automatically filters content that violates these rules, and **blocked videos do not consume GPU time**;
- If you do not agree to these terms, you should not use external images to generate videos.

Official guide: https://docs.midjourney.com/hc/en-us/articles/374707868589-Video