The world’s first AI-native film and television studio has emerged, with project revenue of as much as $110 million!

It’s called Utopai Studios.

With AI’s popularity at its peak, the companies using AI to enter the film industry fall into two main factions:

One is the “tool faction” represented by Runway and Pika, which treats AI as a tool and focuses on making the film and television production process more efficient.

The other is the “content+AI” companies, which apply AI to narrative innovation and to the industrialized production and distribution of content. In effect, they reach into the most profitable zone of the film and television industry: producing content and bringing it to market.

The positioning of these two types of companies determines their different ceilings.

The former focuses on efficiency gains at the tool layer. These companies have high technical barriers and can keep iterating on their generative models. However, their business model is often confined to tool-based SaaS logic (subscription fees, API call fees, enterprise licensing fees), and they may end up as “infrastructure companies” for the film industry, or be replaced by more powerful general-purpose models in the future.

The latter sets out to create new narrative forms and new distribution, which gives it a direct path into IP, copyright, and distribution channels, forming a three-part moat of content, channels, and AI technology. If it breaks through, its ceiling will be much higher than that of a pure tool company, because it has the chance to change the model of the entire film and television industry chain rather than just improve efficiency locally.

Interestingly, Utopai Studios falls under the second category of companies.

Before Utopai’s entry, the field was almost empty: few companies had tried to combine the AI technology paradigm with film and television content and industry operations.

Utopai not only entered the market first, but has already validated its model there. Its two upcoming projects have already brought in $110 million in revenue.

So, what exactly is the origin of such a company?

It is not entirely accurate to say that it has “emerged”. The company was founded in 2022 under the name Cybever, as a 3D generative AI company. It had earlier made a name for itself in Silicon Valley with its technology for “using AI to generate high-precision 3D virtual environments”.

It was from this starting point of AI+3D that the team saw a bigger vision: to stop being merely a technology supplier and instead turn to face content directly, using AI to explore new possibilities for the traditional film and television industry.

## Transformation

Utopai was founded by two Chinese founders who previously worked at Google:

Co-founder and CEO Cecilia Shen, born in the 2000s, was hooked on robotics experiments as a child and studied mathematics at the University of Waterloo in Canada. In her sophomore year, she joined Google X Lab, Google’s most secretive department, and took part in a moonshot project. There she met Jie Yang, the other co-founder of Cybever.

Co-founder and Chief Technology Officer Jie Yang was formerly a scientist at Google Research and later joined Alphabet as Head of Research. He has deep expertise in AI image modeling and generation, and was a major driver of Cybever’s early 3D engine architecture.

When Cybever started in 2022, the team’s goal was to solve the long-standing efficiency bottleneck in professional 3D. Because most clients of the 3D industry come from film and gaming, Cybever focused on generating high-precision 3D virtual environments, providing the scene “foundation” for both industries.

But Cecilia soon ran into the limitation of “visual effects” companies: very low profits. She concluded that the company had to move upstream in the industry chain to gain pricing power and a high-margin business model.

In other words, Cecilia chose to bypass the conventional path of AI tool companies such as Runway and Luma and to engage directly in the production and global distribution of film and entertainment content, becoming a content owner of the AI era.

What followed was unlike most fast-moving AI projects. Utopai took a rare and systematic evolutionary path: over the three years from 2022 to 2025, the two founders built a structured syntax for AI-driven content production in four stages, positioning the company precisely at the intersection of AI and content value:

**Stage 1: from spatial grammar to a “content perpetual-motion machine.”**

From the outset, Cybever chose Procedural Content Generation (PCG) to generate content, rather than the then-popular approaches such as NeRF and 3D Gaussian Splatting (3DGS). This strategic choice laid a key foundation for Utopai and became its stable underlying capability for generating assets.

The advantage of PCG lies not in raw generative power but in quality control, topological integrity, and compatibility with industry pipelines. Today, Utopai’s system can automatically generate thousands of high-precision 3D assets and pair them with different lighting conditions (dawn, dusk, shadows), camera parameters (wide-angle, telephoto), and weather conditions (sunny days, rainy nights, haze). Each combination comes with absolute ground truth that tightly binds geometric information to 2D visual data, providing a standardized input space for subsequent AI understanding and generation.
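To make the combinatorics concrete, here is a minimal Python sketch of how such a condition grid might be enumerated. The lighting, camera, and weather values come from the article; the `RenderSpec` record, the `enumerate_render_specs` helper, and the asset names are hypothetical and are not Utopai’s actual schema or pipeline.

```python
from dataclasses import dataclass
from itertools import product

# Condition values are taken from the article; everything else here
# (field names, asset ids) is a hypothetical illustration.
LIGHTING = ["dawn", "dusk", "shadows"]
CAMERA = ["wide-angle", "telephoto"]
WEATHER = ["sunny day", "rainy night", "haze"]


@dataclass(frozen=True)
class RenderSpec:
    """One render job: a procedurally generated asset under fixed conditions."""
    asset_id: str
    lighting: str
    camera: str
    weather: str


def enumerate_render_specs(asset_ids):
    """Return one RenderSpec per (asset, lighting, camera, weather) combination."""
    return [
        RenderSpec(asset, lighting, camera, weather)
        for asset, lighting, camera, weather in product(
            asset_ids, LIGHTING, CAMERA, WEATHER
        )
    ]


specs = enumerate_render_specs(["alley_001", "plaza_002"])
print(len(specs))  # 2 assets x 3 lighting x 2 camera x 3 weather = 36
```

Because the geometry is generated by rules rather than reconstructed from photos, every such spec could in principle be rendered alongside its exact geometry, which is what makes the paired 2D and 3D data usable as ground truth.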

**Stage 2: encoding “spatial intelligence” into “syntax rules.”**

PCG can place objects at random, but that is also its weakness: problems arise when the output is a space governed by functional logic and spatial order, such as a city block or an interior.

This made Cecilia realize that the AI model needed a “structural” ability, namely “spatial syntax”. At this stage, through continuous training and scene modeling, the model began to understand the implicit rules of space. It could not only generate but also reason, which let it both reproduce the visual logic of the real world and simulate how people expect to behave in a space. This ability gives Utopai’s generated content an unusual degree of structural beauty and functional plausibility.

**Stage 3: the AI agent takes the stage. Here, Utopai went on to develop its AI Agent system.**

This is an AI creative director with design intuition. The Agent system no longer only responds to keyword commands, but can understand vague, abstract, and emotionally charged creative instructions.

For example, when a creator inputs “I want an Eastern alley on a rainy night, with a bit of cyberpunk style and a sense of loneliness like a detective movie,” the agent does not stop at applying a style filter. It generates a “world with intention”: peeling Chinese posters on the wall, neon lights reflected in puddles, moisture lingering in the air. None of these details was directly requested.

More importantly, what the agent outputs is not an inspiration sketch but a complete 3D previsualization (previz) asset, which directly saves film and television productions tens of hours, or even tens of thousands of yuan, in labor and rendering costs.

**Stage 4, in the first half of this year: the leap from tool company to a closed commercial loop.**

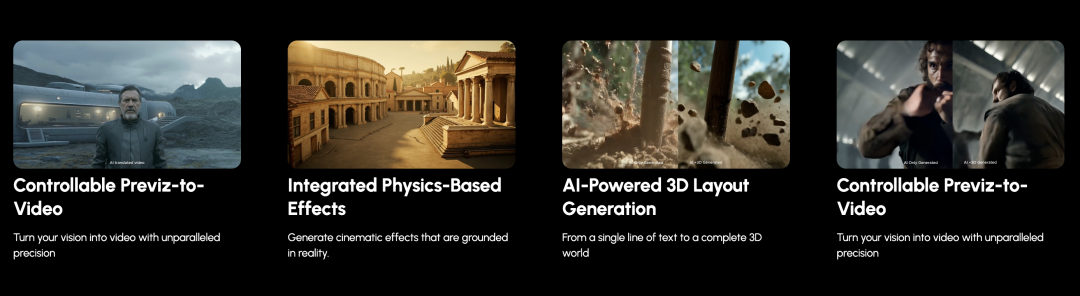

At this stage, Utopai closed the industrial loop from previz to video.

Simply put, Utopai consolidated the capabilities of all four stages into one complete video production workflow, the “Previz-to-Video Pipeline”, and in doing so addressed the three biggest challenges in AI-generated video: consistency, controllability, and narrative continuity.

In other words, directors will no longer need to wait for days. They can preview shots of near-film quality in a few minutes and even iterate creatively in an improvisational shooting style. This is not just an efficiency revolution, but a qualitative change in the ability to experiment with content.

## Dreams and confidence

So, how did Utopai solve the three major technical challenges of “consistency,” “controllability,” and “narrative continuity” in the current AI video generation field?

The question matters because, however stunning its images, AI video generation cannot truly enter industrial settings such as movies and TV dramas without solutions to these three challenges.

Firstly, in a recent interview, Cecilia went straight to the biggest problem with today’s general-purpose AI models:

General-purpose video models are designed to serve the public, not film and television specifically. One of their core goals is efficiency: letting a mass audience get “good enough” results as fast as possible. But this often comes at the cost of image quality.

For precisely this reason, Cecilia described Utopai’s own approach to the model as follows:

Simply put, Utopai’s model is designed only for professional film and television creators who pursue the highest quality, a group willing to wait longer for better results. With this clear positioning, Utopai is free of efficiency constraints. It can reduce compression ratios when training models, add training data in specific directions, adopt more and stronger attention encoding mechanisms, train larger, better, and more focused models, and put all of its computing resources into polishing image quality so that every frame can withstand the test of the big screen.

Secondly, the so-called “consistency” problem that troubles the field of AI video generation refers to the difficulty in ensuring that the appearance, actions, and scene elements of characters remain consistent in different shots, which can lead to “drift” between characters and the environment.

For example, Veo 3, Runway, and others are prone to “drift” in facial features, clothing, lighting, and even environmental details during frame-by-frame generation. A protagonist may wear glasses in one second and lose them in the next.

“This is currently a nightmare for all models in complex scenarios,” Cecilia said. For example, when multiple characters move and interact at once while the camera keeps moving, existing models generally cannot cope, often producing hallucinations in which characters stick together, merge, or move in ways that violate physical laws.

Cecilia pointed out, “We believe that the root of these two problems actually lies in the model’s lack of understanding of the three-dimensional world. Because video is 2D by nature, most models only imitate and compress pixels on a 2D plane.”

This is precisely what sets the Utopai model apart: it grows out of high-precision 3D technology. During training, Utopai injects 3D data that obeys physical laws, so the model no longer learns only 2D image representations. This fundamentally improves the model’s understanding of space, occlusion, and collision, and avoids hallucinations that are inconsistent with the physical world. “Because we have been creating high-precision 3D environments with AI for a long time, this part is actually our DNA,” Cecilia said.

Thirdly, there is “controllability”. This challenge is whether users can control the generated results as precisely as a director would: character expressions, action paths, camera angles, rhythm, and so on.

Because current AI video generation mostly relies on “prompt + random sampling”, its output is inherently random. Users can specify a general direction, but it is very hard to control fine details, such as making a character turn their head 45 degrees or walk to a given position. Without controllability, creators find it hard to treat AI video as a “predictable production tool”; it can only serve as an “inspiration generator”.

Cecilia tied this issue to workflow. “Currently, the common creative process in the industry relies on a large amount of ‘gacha’: repeatedly generating massive amounts of content and then picking the few results that come close to the creative intent. However, this is unacceptable in professional production, because directors have pixel-level precision requirements for every image, from the layout of the square to the position of the water cup, from the strength of the light to the angle of the character’s gaze. In the ‘gacha’ mode, often one element in the shot fits while another deviates, making the creative process full of randomness and frustration.”
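The frustration described in this quote can be put in rough numbers with a short Python sketch. It rests on a simplifying assumption that is not from the article: each element the director cares about comes out right independently, with the same probability on every generation. The function names and the figures used are purely illustrative.

```python
def all_match_probability(p_per_element: float, n_elements: int) -> float:
    """Probability that all n elements match the brief in a single generation,
    assuming each matches independently with probability p_per_element."""
    return p_per_element ** n_elements


def expected_generations(p_per_element: float, n_elements: int) -> float:
    """Mean number of independent generations until one matches on every
    element (the mean of a geometric distribution)."""
    return 1.0 / all_match_probability(p_per_element, n_elements)


# Hypothetical figures: four elements (layout, cup position, light, gaze),
# each coming out right half of the time.
print(all_match_probability(0.5, 4))  # 0.0625
print(expected_generations(0.5, 4))   # 16.0
```

Under these assumptions the chance of a fully correct shot shrinks exponentially with the number of elements that must all be right, which is one way to read why pure sampling and selection scales poorly for professional work.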

Utopai’s answer is to replace random generation and selection with deterministic execution of the director’s intent.

According to Cecilia, the Utopai workflow lets directors quickly and accurately lay out a clear draft through storyboards, 3D previz, and other methods. This draft is not only a visual reference; it also carries structured instructions that encode the director’s core intentions. Utopai’s model and workflow then interpret that intent and, taking the film’s overall artistic style into account, automatically try and adjust in a directed way toward the final goal. This is precisely where technologies such as reinforcement learning and intelligent agents excel.

This may be the fundamental difference between Utopai and many other ‘tech disruptors’, as the core of its system design is not to replace directors and artists. On the contrary, the core of its system is to liberate directors and artists from the shackles of industry and return them to the creative throne.

And this also represents Cecilia’s strong aesthetic tendency. Cecilia said that AI can generate infinite options, but taste is always defined by people who can tell stories and have artistic aesthetics. The deeper significance of Utopai lies in its system design, which pursues a symbiotic evolutionary relationship between humans and AI.

To this end, Cecilia set Utopai’s North Star: to create a personalized, end-to-end AI architecture for film and television production. **Through highly integrated AI models and automated workflows, the production costs of movies and other content are cut significantly, freeing thousands of filmmakers from the shackles of budget and letting them turn stories from scripts into high-quality visual works at unprecedented speed and extremely low cost.** And none of this comes at the expense of quality.

Internally, this means building a “software and hardware integrated” architecture that combines data, models, and workflows.

Cecilia pointed out that a common problem with AI movies and TV shows now is that models and workflows are seen as two independent links, separated from each other. On the one hand, the model company is responsible for improving algorithms, while on the other hand, the production company only focuses on process optimization, lacking deep collaborative evolution between the two.

At the same time, Cecilia also emphasized the importance of content quality. She believes that quality must be prioritized.

The audience’s eyes are like rulers. Sometimes, people underestimate the impact of details. In fact, we have found that every artist or creator is like a child, and they are not resistant to technology. One example is Toy Story, released in 1995, the world’s first fully computer-animated feature film. It was a profound leap forward in both creativity and technology, and earned nearly $400 million at the box office worldwide. So, is the so-called resistance to AI there because we are using AI in the wrong way?

And this brings us back to the issue of details and quality. Technology creates conditions for creating higher quality products, and consumers are not willing to lower their pursuit of quality and storytelling just because of AI.

## Advantages

The final question: what advantages does Utopai have in reaching this North Star?

The clearest proof is the $110 million in revenue it has already generated, which demonstrates Utopai’s strategic integration with Hollywood’s content and ecosystem.

To get there, Utopai has played two ace cards in the film and television industry:

One of them is “Cortés,” which Hollywood has called “the most difficult epic to film in history.” It has long been one of the most talked-about projects in Hollywood, yet has consistently ranked among the top 10 unproduced films, because it is too expensive to make with traditional methods. Utopai, confident in its technology, invited Oscar-nominated screenwriter Nicholas Kazan to write it and Kirk Petruccelli, a concept designer ranked 49th in Hollywood, to direct it.

The other ace is the eight-episode science fiction series “Project Space”, widely described as “Top Gun” meets “World War”. This is also a major production: Utopai invited well-known screenwriters Vanessa Coifman and Martin Weisz to write it and hired Martin Weisz to direct. The series has already been pre-sold in the European market.

“To make AI-native movies and TV shows in Hollywood, the starting point must be high and the methods must be new,” Cecilia said.

In addition, to distribute its projects, Utopai established a joint venture with K5 International, the sales company for “Dances with Wolves” and “Horizon: An American Saga”. K5 will represent all Utopai projects at MIPCOM and AFM this autumn. Utopai has also partnered with OPSIS, a visualization company known for “Game of Thrones” and “Captain America,” to integrate its processes into a filmmaker-friendly workflow.

It should be noted that these two projects have generated approximately $110 million in revenue for Utopai worldwide. No other AI studio has matched this number, and it made Utopai an instant hit. Before releasing its first film, Utopai had already joined the ranks of Hollywood’s top-tier productions.

Every GenAI company is eyeing Hollywood connections. Asteria (now acquired by Moonvalley AI) recently announced plans to produce “Uncanny Valley”, while Runway and Luma also heavily promote their Hollywood networks. So far, however, no company has announced comparable plans in AI-driven film content.

Utopai’s story, meanwhile, had appeared in Forbes as early as the end of April this year. In August, Forbes published an exclusive report on the company, stating that “Utopai’s story marks a shift in the positioning of AI companies in the media industry. They do not intend to sell models or APIs to the studio system, but believe that the real value lies in creating and owning intellectual property. This model is similar to Pixar’s approach of transforming its graphic tools into narrative engines.”

Of course, Utopai’s advantages also include its self-developed underlying models and data. But Cecilia is currently unwilling to say more about these two issues.

What Utopai is doing is breaking down the fences around imagination and rescuing movies from the tyranny of budget. This is not just a technology upgrade, but a revolution in creative freedom. All of this has only just begun. Let the bullets fly a little longer.